While many enterprises across a wide range of verticals—finance, healthcare, technology—build applications on the modern data stack, some are not fully aware of the application performance management (APM) and operational challenges that often arise. Over the course of a two-part blog series, we’ll address the requirements at both the individual application level, as well as holistic clusters and workloads, and explore what type of architecture can provide automated solutions for these complex environments.

The Modern Data Stack and Operational Performance Requirements

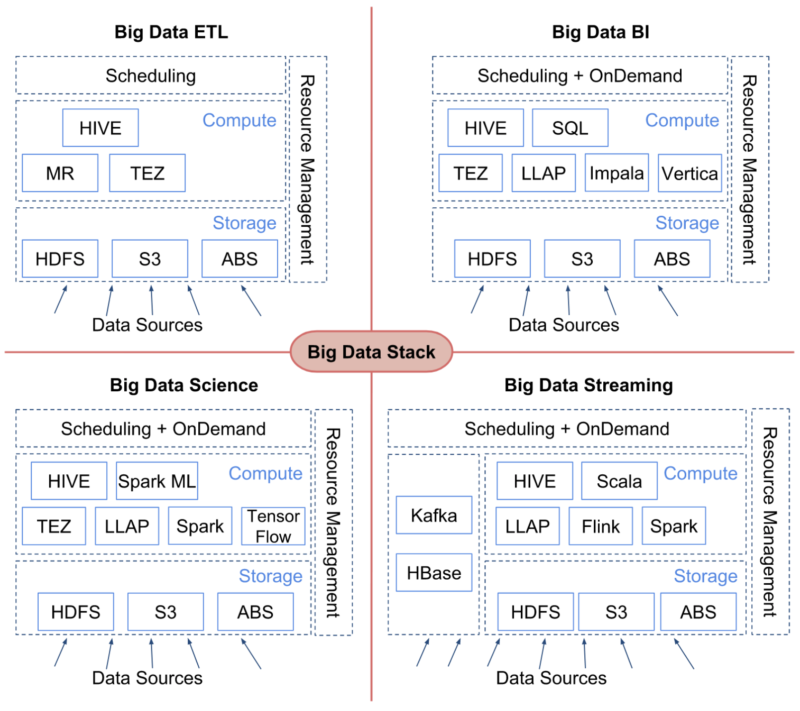

Composed of multiple distributed systems, the modern data stack in an enterprise typically goes through the following evolution:

- Big data ETL: storage systems, such as Azure Blob Store (ABS), house the large volumes of structured, semi-structured and unstructured data. Distributed processing engines, like MapReduce, come in for data extraction, cleaning and transformation of the data.

- Big data BI: MPP SQL systems, such as Impala, are added to the stack, which power the interactive SQL queries that are common in BI workloads.

- Big data science: with maturity of use of a modern data stack, more workloads leverage machine learning (ML) and artificial intelligence (AI).

- Big data streaming: Systems such as Kafka are added to the modern data stack to support applications that ingest and process data in a continuous streaming fashion.

Evolution of the modern data stack in an enterprise

Industry analysts estimate that there are more than 10,000 enterprises worldwide running applications in production on a modern data stack, comprised of three or more distributed systems.

Naturally, performance challenges are inherent related to failure, speed, SLA or behavior. Typical questions that are nontrivial to answer include:

- What caused an application to fail and how can I fix it?

- This application seems to have made little progress in the last hour. Where is it stuck?

- Will this application ever finish, or will it finish in a reasonable time?

- Will this application meet its SLA?

- Does this application behave differently than in the past? If so, in what way and why?

- Is this application causing problems in my cluster?

- Is the performance of this application being affected by one or more other applications?

Many operational performance requirements are needed at the “macro” level compared to the level of individual applications. These include:

- Configuring resource allocation policies to meet SLAs in multi-tenant clusters

- Detecting rogue applications that can affect the performance of SLA-bound applications through a variety of low-level resource interactions

- Configuring the hundreds of configuration settings that distributed systems are notoriously known for having in order to get the desired performance

- Tuning data partitioning and storage layout

- Optimizing dollar costs on the cloud

- Capacity planning using predictive analysis in order to account for workload growth proactively

The architecture of a Performance Management Solution

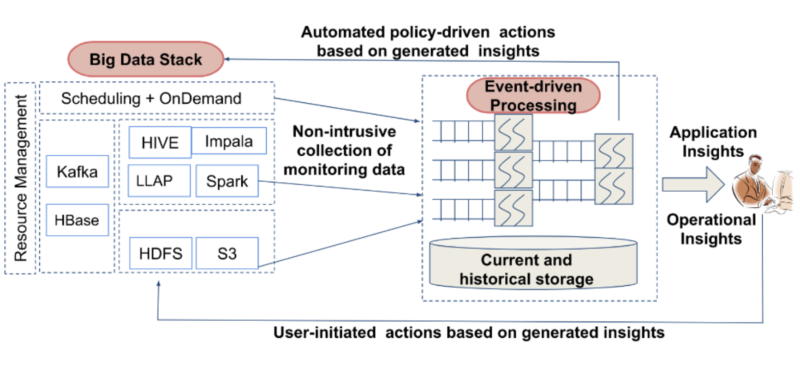

Addressing performance challenges requires a sophisticated architecture that includes the following components:

- Full data stack collection: to answer questions like what caused this application to fail or will this application ever meet its SLA, monitoring data will be needed from every level of the stack. However, collecting such data in a non-intrusive or low-overhead manner from production clusters is a major technical challenge.

- Event-driven data processing: deployments can generate tens of terabytes of logs and metrics every day, which can present variety and consistency challenges, thus calling for the data processing layer to be based on event-driven processing algorithms whose outputs converge to the same final state irrespective of the timeliness and order in which the monitoring data arrives.

- Machine learning-driven insights and policy-driven actions: Enabling all of the monitoring data to be collected and stored in a single place allows for statistical analysis and machine learning to be applied to the data. Such algorithms can generate insights that can then be applied to address the performance requirements.

Architecture of a performance management platform for Modern data applications

There are several technical challenges involved in this process. Examples include:

- What is the optimal way to correlate events across independent systems? In other words, if an application accesses multiple systems and the events pass through them, how end-to-end correlation can be done?

- How can we deal with telemetry noise and data quality issues which are common in such complex environments? For example, records from multiple systems may not be completely aligned and may not be standardized.

- How can we train ML models in closed-loop, complex deployments? How can we split training and test data?

Now that we’ve tackled the modern data stack and reviewed operational challenges and requirements for a performance management solution to meet those needs, we look forward to discussing the features that a solution should offer in our next post.