The Complexities of Code Optimization in BigQuery: Problems, Challenges and Solutions

Google BigQuery offers a powerful, serverless data warehouse that can handle massive datasets with ease. That power brings its own set of challenges, however, particularly in code optimization.

This blog post delves into the complexities of code optimization in BigQuery, the difficulties in diagnosing and resolving issues, and how automated solutions can simplify this process.

The BigQuery Code Optimization Puzzle

1. Query Performance and Cost Management

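Problem: In BigQuery, query performance and cost are intimately linked. Under on-demand pricing, BigQuery bills by the bytes a query processes. An inefficient query that scans more data than it needs is therefore not only slow but can also be extremely expensive on large datasets. Under capacity-based pricing, the same query consumes slot time that other workloads could have used.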

Diagnosis Challenge: Identifying the root cause of a slow, costly query is complex. Is it due to inefficient JOIN operations, suboptimal table structures, or simply the sheer volume of data being processed? BigQuery exposes a query plan with per-stage execution statistics. However, interpreting that plan for complex queries and understanding its cost implications requires significant expertise.
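Diagnosis often starts by putting a number on a query's cost. The sketch below converts a byte count into an approximate on-demand cost. In practice, the byte count could come from a dry run with the google-cloud-bigquery client: `bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)` reports the job's `total_bytes_processed` without running the query. The per-TiB rate here is an illustrative placeholder, not a quoted price, so check current pricing for your region.

```python
# Bytes in one tebibyte; BigQuery on-demand pricing is quoted per TiB.
TIB = 2 ** 40

# Illustrative placeholder rate (assumption), not an authoritative price.
ILLUSTRATIVE_USD_PER_TIB = 6.25


def estimate_on_demand_cost(bytes_processed: int, usd_per_tib: float) -> float:
    """Approximate on-demand cost of a query that processes `bytes_processed` bytes.

    This deliberately ignores billing details such as minimum-billing rules
    and rounding, so treat the result as a rough estimate.
    """
    if bytes_processed < 0:
        raise ValueError("bytes_processed must be non-negative")
    return bytes_processed / TIB * usd_per_tib


# Hypothetical figures: the same report before and after adding a selective filter.
before = estimate_on_demand_cost(3 * TIB, ILLUSTRATIVE_USD_PER_TIB)
after = estimate_on_demand_cost(TIB // 4, ILLUSTRATIVE_USD_PER_TIB)
print(f"before: ${before:.2f}, after: ${after:.2f}")
```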

Resolution Difficulty: Optimizing BigQuery queries often involves a delicate balance between performance and cost. Techniques like denormalizing data might improve query speed but increase storage costs. Each optimization needs to be carefully evaluated for its impact on both performance and billing, which can be a time-consuming and error-prone process.
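The denormalization trade-off can be framed as simple arithmetic. Extra storage is a fixed monthly cost, while savings on bytes scanned grow with query volume. In this sketch, the `Design` records, table sizes, scan sizes, and both rates are hypothetical inputs chosen only to illustrate the calculation. They are not measurements or real prices.

```python
from dataclasses import dataclass

# Illustrative placeholder rates (assumptions), not authoritative prices.
ILLUSTRATIVE_USD_PER_GIB_MONTH = 0.02
ILLUSTRATIVE_USD_PER_TIB = 6.25


@dataclass
class Design:
    name: str
    stored_gib: float             # storage this table design keeps, in GiB
    scanned_gib_per_query: float  # GiB one run of the workload query reads


def monthly_cost(design: Design, queries_per_month: int) -> float:
    """Storage cost plus on-demand scan cost for one month of the workload."""
    storage = design.stored_gib * ILLUSTRATIVE_USD_PER_GIB_MONTH
    scanned_tib = queries_per_month * design.scanned_gib_per_query / 1024
    return storage + scanned_tib * ILLUSTRATIVE_USD_PER_TIB


def cheaper(designs: list[Design], queries_per_month: int) -> str:
    """Name of the design with the lowest estimated monthly cost."""
    return min(designs, key=lambda d: monthly_cost(d, queries_per_month)).name


# Hypothetical: denormalizing doubles storage but halves the bytes each query scans.
normalized = Design("normalized", stored_gib=1024, scanned_gib_per_query=512)
denormalized = Design("denormalized", stored_gib=2048, scanned_gib_per_query=256)

for q in (10, 100):
    print(q, cheaper([normalized, denormalized], q))
```

With these made-up numbers, the extra storage costs $20.48 a month and each query saves $1.5625. Denormalizing pays off only above a little over 13 queries a month. The break-even point moves with every input, which is why each change needs to be evaluated against the actual workload.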

2. Partitioning and Clustering Challenges

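Problem: Improper partitioning and clustering can lead to excessive data scanning, resulting in slow queries and unnecessary costs. For example, if a query does not filter on a table's partitioning column, BigQuery cannot prune partitions and must read all of them.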

Diagnosis Challenge: The effects of suboptimal partitioning and clustering may not be immediately apparent and can vary depending on query patterns. Identifying whether poor performance is due to partitioning issues, clustering issues, or something else entirely requires analyzing query patterns over time and understanding the intricacies of BigQuery’s architecture.
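Analyzing query patterns over time can begin with a simple tally. This sketch ranks columns by the total bytes processed by queries that filter on them, which hints at good partitioning or clustering candidates. The log is hypothetical and already parsed. In practice, job history could come from BigQuery's `INFORMATION_SCHEMA.JOBS` views, but extracting each query's filter columns from the SQL text is assumed to happen upstream.

```python
from collections import Counter

GIB = 2 ** 30

# Hypothetical, pre-parsed query log: (columns filtered on, bytes processed).
query_log = [
    ({"event_date", "customer_id"}, 120 * GIB),
    ({"event_date"}, 300 * GIB),
    ({"customer_id"}, 80 * GIB),
    ({"event_date", "country"}, 50 * GIB),
]


def rank_filter_columns(log) -> list[tuple[str, int]]:
    """Rank columns by the total bytes processed by queries that filter on them."""
    weight = Counter()
    for columns, bytes_processed in log:
        for column in columns:
            weight[column] += bytes_processed
    return weight.most_common()


for column, total in rank_filter_columns(query_log):
    print(column, total // GIB, "GiB")
```

Weighting by bytes rather than by query count is a design choice. It favors the columns whose filters would save the most scanning, not the columns that appear most often.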

Resolution Difficulty: Changing partitioning or clustering strategies is not a trivial operation, especially for large tables. It requires careful planning and can temporarily impact query performance during the restructuring process. Determining the optimal strategy often requires extensive A/B testing and monitoring across various query types and data sizes.
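One common way to try a new layout is to build a restructured copy with a `CREATE TABLE ... PARTITION BY ... CLUSTER BY ... AS SELECT` statement, then compare representative queries against both tables before switching over. Building the copy reads the entire source table, so this step has a cost of its own. The helper below is a hypothetical sketch that only generates the DDL text. The table names and columns in the example are placeholders, and the check reflects BigQuery's limit of four clustering columns.

```python
def repartition_ddl(source: str, target: str, partition_expr: str,
                    cluster_columns: list[str]) -> str:
    """Build a BigQuery CTAS statement that copies `source` into a new layout."""
    if not 1 <= len(cluster_columns) <= 4:
        raise ValueError("BigQuery allows between one and four clustering columns")
    return (
        f"CREATE TABLE `{target}`\n"
        f"PARTITION BY {partition_expr}\n"
        f"CLUSTER BY {', '.join(cluster_columns)}\n"
        f"AS SELECT * FROM `{source}`"
    )


# Hypothetical table names, for illustration only.
ddl = repartition_ddl(
    "my_project.analytics.events",
    "my_project.analytics.events_v2",
    "DATE(event_ts)",
    ["customer_id", "country"],
)
print(ddl)
```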

3. Nested and Repeated Fields Complexity

Problem: While BigQuery’s support for nested and repeated fields offers flexibility, it can lead to complex queries that are difficult to optimize and prone to performance issues.

Diagnosis Challenge: Understanding the performance characteristics of queries involving nested and repeated fields is like solving a multidimensional puzzle. The query explanation may not provide clear insights into how these fields are being processed, making it difficult to identify bottlenecks.
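One frequent, hard-to-spot bottleneck is row multiplication. In BigQuery, `FROM orders, UNNEST(items) AS item` is a correlated cross join. Unnesting two independent repeated fields from the same row therefore produces every combination of their elements. The sketch below mimics that behavior in plain Python for one hypothetical order row.

```python
from itertools import product

# One hypothetical order row with two independent repeated fields.
order = {
    "order_id": 1,
    "items": ["apple", "pear", "plum"],
    "coupons": ["SPRING", "VIP"],
}


def unnest_both(row: dict) -> list[dict]:
    """Mimic `FROM orders, UNNEST(items) AS item, UNNEST(coupons) AS coupon`.

    Each comma join against an array pairs the row with every element, so two
    arrays yield len(items) * len(coupons) rows. An empty array yields no rows
    at all, which silently drops the order.
    """
    return [
        {"order_id": row["order_id"], "item": item, "coupon": coupon}
        for item, coupon in product(row["items"], row["coupons"])
    ]


flat = unnest_both(order)
print(len(flat))                                      # rows after the double unnest
print(sum(1 for r in flat if r["item"] == "apple"))   # "apple" is now counted twice
```

The output shows 6 rows instead of 3 items, so naive counts over the flattened result are inflated. Computing per-row values inside the array, for example with `ARRAY_LENGTH(items)` or a correlated subquery such as `(SELECT COUNT(*) FROM UNNEST(items))`, avoids the multiplication.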

Resolution Difficulty: Optimizing queries with nested and repeated fields often requires restructuring the data model or rewriting queries in non-intuitive ways. This process can be time-consuming and may require significant changes to ETL processes and downstream analytics.
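Restructuring the data model can mean moving a repeated field into its own table, keyed back to the parent. This is a hypothetical sketch of that split on in-memory rows. The field names are invented for illustration. Whether the split helps depends on the query patterns, because it reintroduces a join, which is the reverse of the denormalization trade-off discussed earlier.

```python
def split_nested(orders: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split nested order rows into a parent table and a child table keyed by order_id."""
    parents, children = [], []
    for row in orders:
        parents.append({"order_id": row["order_id"], "customer": row["customer"]})
        # Keep each element's position so the original array order can be rebuilt.
        for position, item in enumerate(row["items"]):
            children.append({"order_id": row["order_id"], "position": position, "item": item})
    return parents, children


# Hypothetical input; the second order has an empty repeated field.
orders = [
    {"order_id": 1, "customer": "acme", "items": ["bolt", "nut"]},
    {"order_id": 2, "customer": "zeta", "items": []},
]
parents, children = split_nested(orders)
print(len(parents), len(children))
```

Unlike a cross join with `UNNEST`, this split keeps a parent row for an order whose repeated field is empty.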

4. UDF and Stored Procedure Performance

Problem: User-Defined Functions (UDFs) and stored procedures in BigQuery can lead to unexpected performance issues if not implemented carefully.

Diagnosis Challenge: The impact of UDFs and stored procedures on query performance isn’t always clear from the query explanation. Identifying whether these are the source of performance issues requires careful analysis and benchmarking.
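Benchmarking a UDF usually means running the query with and without it several times and comparing job statistics. With the google-cloud-bigquery client, a `QueryJob` exposes `slot_millis`, `total_bytes_processed`, and `started`/`ended` timestamps that could populate the hypothetical `RunStats` record below. Running with `use_query_cache=False` keeps cached results from skewing the timings. The figures in the example are invented. They illustrate a case where a UDF leaves bytes processed unchanged while raising slot time, which a bytes-only view would miss.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class RunStats:
    elapsed_ms: int
    slot_ms: int
    bytes_processed: int


def compare(baseline: list[RunStats], candidate: list[RunStats]) -> dict[str, float]:
    """Median candidate/baseline ratio per metric; values above 1 mean the candidate is worse."""
    def ratio(metric: str) -> float:
        base = median(getattr(r, metric) for r in baseline)
        cand = median(getattr(r, metric) for r in candidate)
        return cand / base

    return {m: ratio(m) for m in ("elapsed_ms", "slot_ms", "bytes_processed")}


# Hypothetical runs: native SQL expression vs. the same logic in a UDF.
native = [RunStats(2000, 1000, 50 * 2**30), RunStats(2100, 1100, 50 * 2**30), RunStats(1900, 900, 50 * 2**30)]
with_udf = [RunStats(4000, 3000, 50 * 2**30), RunStats(4200, 2800, 50 * 2**30), RunStats(3800, 3200, 50 * 2**30)]
print(compare(native, with_udf))
```

Medians are used rather than means so that a single slow run does not dominate the comparison.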

Resolution Difficulty: Optimizing UDFs and stored procedures often involves rewriting them from scratch or finding ways to eliminate them altogether. This can be a complex process, especially if these functions are widely used across your BigQuery projects.

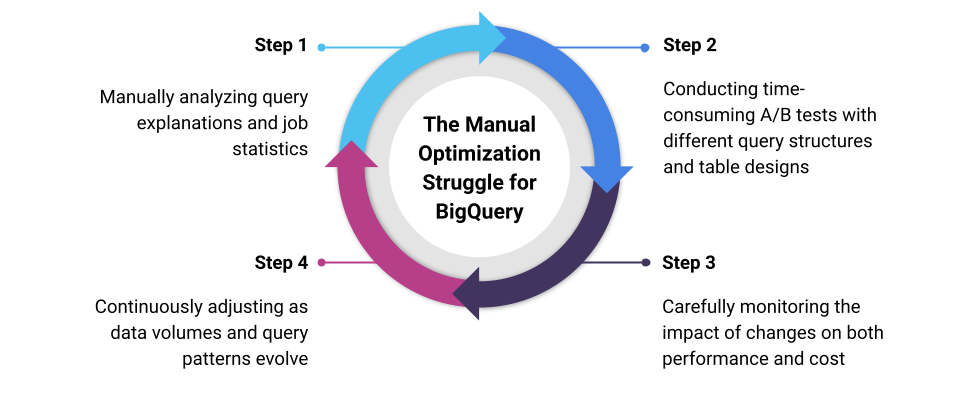

Manual Optimization Struggle

Traditionally, addressing these challenges involves a cycle of:

1. Manually analyzing query explanations and job statistics

2. Conducting time-consuming A/B tests with different query structures and table designs

3. Carefully monitoring the impact of changes on both performance and cost

4. Continuously adjusting as data volumes and query patterns evolve
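Step 4 of this cycle amounts to comparing today's numbers with a known-good baseline. The sketch below flags recurring queries whose bytes processed grew beyond a threshold. The query identifiers, byte counts, and the 50% threshold are hypothetical. In practice, the identifiers would need to be stable keys for recurring queries, and the figures would come from job history.

```python
def flag_regressions(baseline: dict[str, int], current: dict[str, int],
                     max_growth: float = 0.5) -> list[str]:
    """Query IDs whose bytes processed grew by more than `max_growth` (0.5 = 50%).

    Queries with no (or zero) baseline are skipped rather than flagged.
    """
    flagged = []
    for query_id, now in current.items():
        before = baseline.get(query_id)
        if before and (now - before) / before > max_growth:
            flagged.append(query_id)
    return sorted(flagged)


# Hypothetical GiB-per-run figures for recurring queries.
baseline = {"daily_report": 100, "funnel": 200, "adhoc": 50}
current = {"daily_report": 180, "funnel": 210, "new_query": 999}
print(flag_regressions(baseline, current))
```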

This process is not only time-consuming but also requires deep expertise in BigQuery’s architecture, SQL optimization techniques, and cost management strategies. Even then, optimizations that work today might become inefficient as your data and usage patterns change.

Harnessing Automation for BigQuery Optimization

Given the complexities and ongoing nature of these challenges, many organizations are turning to automated solutions to streamline their BigQuery optimization efforts. Tools like Unravel can help by:

Continuous Performance and Cost Monitoring: Automatically tracking query performance, resource utilization, and cost metrics across your entire BigQuery environment.

Intelligent Query Analysis: Using machine learning algorithms to identify patterns and anomalies in query performance and cost that might be missed by manual analysis.

Root Cause Identification: Quickly pinpointing the source of performance issues, whether they’re related to query structure, data distribution, or BigQuery-specific features like partitioning and clustering.

Optimization Recommendations: Providing actionable suggestions for query rewrites, partitioning and clustering strategies, and cost-saving measures.

Impact Prediction: Estimating the potential performance and cost impacts of suggested changes before you implement them.

Automated Policy Enforcement: Helping enforce best practices and cost controls automatically across your BigQuery projects.
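To make the monitoring idea concrete, here is a deliberately simple stand-in: a trailing-window z-score check on daily cost. This is a basic statistical technique swapped in for illustration. It is not the machine-learning approach any particular tool uses, and the cost series, window size, and threshold are all hypothetical. For hard limits rather than alerts, BigQuery itself also supports a per-query `maximum_bytes_billed` setting.

```python
from statistics import mean, stdev


def anomalous_days(daily_cost: list[float], window: int = 7, z: float = 3.0) -> list[int]:
    """Indices of days whose cost exceeds the trailing-window mean by more than z standard deviations.

    Days with a perfectly flat history (zero deviation) are never flagged.
    """
    flagged = []
    for i in range(window, len(daily_cost)):
        history = daily_cost[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (daily_cost[i] - mu) / sigma > z:
            flagged.append(i)
    return flagged


# Hypothetical daily spend, with one runaway query on day 8.
costs = [100, 102, 98, 101, 99, 100, 103, 100, 250, 101]
print(anomalous_days(costs))
```

After the spike, it stays in the trailing window and inflates the deviation for the next week. A production check would need to handle that, for example by excluding flagged days from the history.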

By leveraging such automated solutions, data teams can focus their expertise on deriving insights from data while ensuring their BigQuery environment remains optimized and cost-effective. Instead of spending hours digging through query explanations and job statistics, teams can quickly identify and resolve issues, or even prevent them from occurring in the first place.

Conclusion

Code optimization in BigQuery is a complex, ongoing challenge that requires continuous attention and expertise. While the problems are multifaceted and the manual diagnosis and resolution process can be daunting, automated solutions offer a path to simplify and streamline these efforts. By leveraging such tools, organizations can more effectively manage their BigQuery performance and costs, improve query efficiency, and allow their data teams to focus on delivering value rather than constantly grappling with optimization challenges.

Remember, whether you’re using manual methods or automated tools, optimization in BigQuery is an ongoing process. As your data volumes grow and query patterns evolve, staying on top of performance and cost management will ensure that your BigQuery implementation continues to deliver the insights your business needs, efficiently and cost-effectively.

To learn more about how Unravel can help with BigQuery code optimization, request a health check report, view a self-guided product tour, or request a demo.