In the world of big data, configuration management is often the unsung hero of platform performance and cost-efficiency. Whether you’re working with Snowflake, Databricks, BigQuery, or any other modern data platform, effective configuration management can mean the difference between a sluggish, expensive system and a finely tuned, cost-effective one.

This blog post explores the complexities of configuration management in data platforms, the challenges in optimizing these settings, and how automated solutions can simplify this critical task.

The Configuration Conundrum

1. Cluster and Warehouse Sizing

Problem: Improper sizing of compute resources (like Databricks clusters or Snowflake warehouses) can lead to either performance bottlenecks or unnecessary costs.

Diagnosis Challenge: Determining the right size for your compute resources is not straightforward. It depends on workload patterns, data volumes, and query complexity, all of which can vary over time. Identifying whether performance issues or high costs are due to improper sizing requires analyzing usage patterns across multiple dimensions, such as compute utilization, query queuing, and data spilled to disk.

Resolution Difficulty: Adjusting resource sizes often involves a trial-and-error process. Too small, and you risk poor performance; too large, and you’re wasting money. The impact of changes may not be immediately apparent and can affect different workloads in unexpected ways.
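One way to take some of the guesswork out of this loop is a simple rule that moves one size at a time based on observed metrics. The sketch below is a minimal illustration, not a vendor algorithm: the `WarehouseStats` fields, the thresholds, and the `recommend_size` helper are hypothetical. Only the size ladder comes from Snowflake's standard warehouse sizes, where each step up doubles the credits consumed per hour.

```python
from dataclasses import dataclass

# Snowflake standard warehouse sizes, smallest to largest.
# Each step up doubles the credits consumed per hour.
SIZES = ["X-Small", "Small", "Medium", "Large", "X-Large",
         "2X-Large", "3X-Large", "4X-Large"]

@dataclass
class WarehouseStats:
    """Hypothetical aggregated metrics for one warehouse over a time window."""
    size: str
    avg_queue_seconds: float  # time queries waited for compute
    avg_utilization: float    # fraction of compute busy, 0.0 to 1.0
    spilled_gb: float         # data spilled to local or remote storage

# Illustrative thresholds (assumptions, not platform recommendations).
MAX_SPILL_GB = 10.0
MAX_QUEUE_SECONDS = 30.0
MIN_UTILIZATION = 0.25

def recommend_size(stats: WarehouseStats) -> str:
    """Suggest one step up, one step down, or no change."""
    i = SIZES.index(stats.size)
    # Heavy spilling suggests queries lack memory: step up one size.
    if stats.spilled_gb > MAX_SPILL_GB and i < len(SIZES) - 1:
        return SIZES[i + 1]
    # Mostly idle, no spilling, little queuing: step down to save credits.
    if (stats.avg_utilization < MIN_UTILIZATION
            and stats.spilled_gb == 0
            and stats.avg_queue_seconds < MAX_QUEUE_SECONDS
            and i > 0):
        return SIZES[i - 1]
    return stats.size

print(recommend_size(WarehouseStats("Large", 2.0, 0.15, 0.0)))    # Medium
print(recommend_size(WarehouseStats("Medium", 5.0, 0.80, 42.0)))  # Large
```

Moving a single step per change keeps the trial and error bounded: each adjustment can be measured before the next one is made.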

2. Caching and Performance Optimization Settings

Problem: Suboptimal caching strategies and performance settings can lead to repeated computations and slow query performance.

Diagnosis Challenge: The effectiveness of caching and other performance optimizations can be highly dependent on specific workload characteristics. Identifying whether poor performance is due to cache misses, inappropriate caching strategies, or other factors requires deep analysis of query patterns and platform-specific metrics.

Resolution Difficulty: Tuning caching and performance settings often requires a delicate balance. Aggressive caching might improve performance for some queries while causing staleness issues for others. Each adjustment needs to be carefully evaluated across various workload types.
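The staleness trade-off is easy to see in a small simulation of a generic time-to-live (TTL) result cache. This is an illustrative model with made-up timestamps, not how any particular platform caches results; some result caches invalidate entries as soon as the underlying data changes, which avoids staleness at the cost of fewer hits. The hypothetical `simulate_ttl_cache` function replays repeated runs of one query and counts how many cache hits were served after the data had changed.

```python
from bisect import bisect_right

def simulate_ttl_cache(query_times, update_times, ttl):
    """Replay repeated runs of one query against a TTL result cache.

    query_times:  sorted times (seconds) at which the same query runs
    update_times: sorted times at which the underlying table changes
    ttl:          seconds a cached result is reused before recomputing
    Returns (hits, stale_hits), where a stale hit is a cached result
    served after the data changed since it was computed.
    """
    hits = stale_hits = 0
    cached_at = None  # time the current cached result was computed
    for t in query_times:
        if cached_at is not None and t - cached_at < ttl:
            hits += 1
            # Count updates in the interval (cached_at, t].
            changed = bisect_right(update_times, t) - bisect_right(update_times, cached_at)
            if changed > 0:
                stale_hits += 1
        else:
            cached_at = t  # cache miss: recompute and store the result
    return hits, stale_hits

queries = [0, 60, 120, 180, 240, 300, 360, 420]  # query runs every minute
updates = [150]                                  # table changes once
for ttl in (30, 120, 600):
    print(ttl, simulate_ttl_cache(queries, updates, ttl))
# 30 (0, 0)
# 120 (4, 1)
# 600 (7, 5)
```

A longer TTL turns more runs into cache hits, but it also serves more results that were computed before the update at t=150.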

3. Security and Access Control Configurations

Problem: Overly restrictive security settings can hinder legitimate work, while overly permissive ones can create security vulnerabilities.

Diagnosis Challenge: Identifying the root cause of access issues can be complex, especially in platforms with multi-layered security models. Is a query failing or running slowly because of the query itself, or because of an overly restrictive security policy?
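In platforms with role hierarchies (Snowflake, for example, lets a role be granted to another role, which then inherits its privileges), a common diagnostic step is to work out whether a user's role actually holds a privilege and through which chain of grants. The sketch below does this over a hypothetical in-memory grant model; the role names, privilege strings, and `explain_privilege` helper are illustrative and are not any platform's API.

```python
from collections import deque

# Hypothetical grant model: role -> roles granted to it (whose privileges it inherits).
ROLE_GRANTS = {
    "engineer": ["analyst", "etl_writer"],
    "analyst": ["reporting_reader"],
    "reporting_reader": ["public"],
    "etl_writer": [],
    "public": [],
}
# Hypothetical privileges held directly by each role.
PRIVILEGES = {
    "reporting_reader": {"SELECT:sales.orders"},
    "etl_writer": {"INSERT:sales.orders"},
    "public": {"USAGE:db.sales"},
}

def explain_privilege(role, privilege):
    """Return the shortest chain of roles through which `role` holds
    `privilege`, or None if no inherited role grants it (breadth-first search)."""
    queue = deque([[role]])
    seen = {role}
    while queue:
        path = queue.popleft()
        current = path[-1]
        if privilege in PRIVILEGES.get(current, set()):
            return path
        for granted in ROLE_GRANTS.get(current, []):
            if granted not in seen:
                seen.add(granted)
                queue.append(path + [granted])
    return None

print(explain_privilege("engineer", "SELECT:sales.orders"))
# ['engineer', 'analyst', 'reporting_reader']
print(explain_privilege("analyst", "INSERT:sales.orders"))
# None
```

A `None` result points to a missing grant rather than a problem in the query, which narrows the investigation quickly.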

Resolution Difficulty: Adjusting security configurations requires careful consideration of both security requirements and operational needs. Changes need to be thoroughly tested to ensure they don’t inadvertently create security holes or disrupt critical workflows.

4. Cost Control and Resource Governance

Problem: Without proper cost control measures, data platform expenses can quickly spiral out of control.

Diagnosis Challenge: Understanding the cost implications of various platform features and usage patterns is complex. Is a spike in costs due to inefficient queries, improper resource allocation, or simply increased usage?
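A useful first cut at this question is to split a cost change into a volume effect (more queries) and a per-query effect (each query costing more, which points toward inefficient queries or resource allocation). Writing total cost as query count times average cost per query, C = N × c̄, the change decomposes exactly as ΔC = (N₁ − N₀)·c̄₀ + N₁·(c̄₁ − c̄₀). The function name and the sample numbers below are hypothetical.

```python
def decompose_cost_change(queries_before, cost_before, queries_after, cost_after):
    """Split a change in total cost into volume and per-query effects.

    Uses total = query_count * avg_cost_per_query, so that
    total_change = (n1 - n0) * avg0 + n1 * (avg1 - avg0) exactly.
    """
    avg_before = cost_before / queries_before
    avg_after = cost_after / queries_after
    volume_effect = (queries_after - queries_before) * avg_before
    per_query_effect = queries_after * (avg_after - avg_before)
    return {
        "total_change": cost_after - cost_before,
        "volume_effect": volume_effect,        # from running more (or fewer) queries
        "per_query_effect": per_query_effect,  # from each query costing more (or less)
    }

# 1,000 queries for 500 credits last week; 1,200 queries for 900 credits this week.
print(decompose_cost_change(1000, 500.0, 1200, 900.0))
# {'total_change': 400.0, 'volume_effect': 100.0, 'per_query_effect': 300.0}
```

Here most of the increase comes from the per-query effect, so growth in usage alone does not explain the spike.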

Resolution Difficulty: Implementing effective cost control measures often involves setting up complex policies and monitoring systems. It requires balancing cost optimization with the need for performance and flexibility, which can be a challenging trade-off to manage.
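Budget policies are one common form of such controls. Snowflake's resource monitors, for instance, attach a credit quota to an account or to warehouses and fire actions such as notifying or suspending at percentage thresholds. The sketch below models the same idea generically; the trigger list, the linear spend projection, and the function names are assumptions for illustration, not a platform API.

```python
# Hypothetical budget policy: (percent of quota used, action to take).
DEFAULT_TRIGGERS = [(75, "notify"), (100, "suspend")]

def triggered_actions(credits_used, credit_quota, triggers=DEFAULT_TRIGGERS):
    """Return every action whose threshold has been reached this period."""
    percent_used = 100.0 * credits_used / credit_quota
    return [action for threshold, action in triggers if percent_used >= threshold]

def projected_spend(credits_used, days_elapsed, days_in_period):
    """Linear projection of end-of-period spend from the run rate so far."""
    return credits_used / days_elapsed * days_in_period

# 400 credits used 10 days into a 30-day period with a 1,000-credit quota.
print(triggered_actions(400, 1000))                           # []
print(projected_spend(400, 10, 30))                           # 1200.0
print(triggered_actions(projected_spend(400, 10, 30), 1000))  # ['notify', 'suspend']
```

Evaluating the projected spend, not just the spend to date, turns a hard stop into an early warning.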

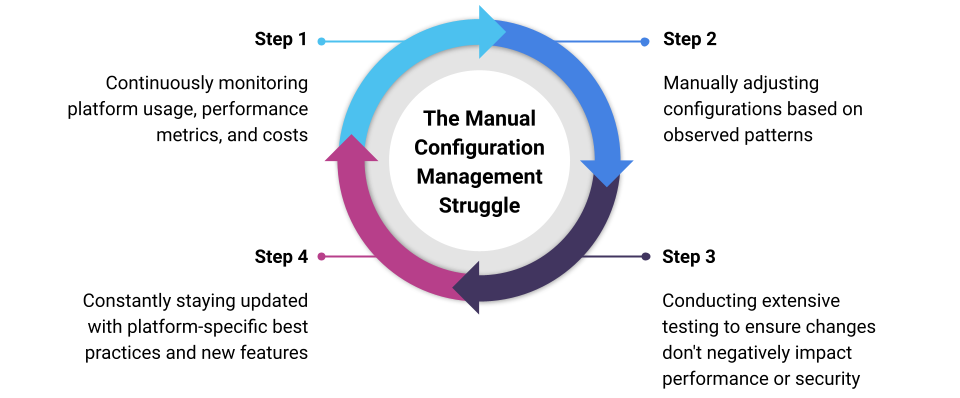

The Manual Configuration Management Struggle

Traditionally, managing these configurations involves:

1. Continuously monitoring platform usage, performance metrics, and costs

2. Manually adjusting configurations based on observed patterns

3. Conducting extensive testing to ensure changes don’t negatively impact performance or security

4. Keeping up with platform-specific best practices and new features

5. Repeating this process as workloads and requirements evolve

This approach is not only time-consuming but also reactive. By the time an issue is noticed and diagnosed, it may have already impacted performance or inflated costs. Moreover, the complexity of modern data platforms means that the impact of configuration changes can be difficult to predict, leading to a constant cycle of tweaking and re-adjusting.
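A lightweight way to catch unexpected side effects is to compare metrics from a window before and after each change and flag anything that got worse. In this minimal sketch, the input format, the choice of p95 latency and average cost per query, and the 10% tolerance are all assumptions. A real comparison also needs enough queries and comparable workloads in both windows to be meaningful.

```python
from statistics import mean, quantiles

def p95(values):
    """95th percentile: the last of the 19 cut points from quantiles(n=20)."""
    return quantiles(values, n=20, method="inclusive")[-1]

def detect_regressions(before, after, tolerance=0.10):
    """Compare per-query latency and cost before and after a config change.

    before/after: dicts with 'latency_s' and 'cost' lists (hypothetical format).
    Returns the names of metrics that worsened by more than `tolerance`.
    """
    checks = {
        "p95_latency": (p95(before["latency_s"]), p95(after["latency_s"])),
        "avg_cost": (mean(before["cost"]), mean(after["cost"])),
    }
    return [name for name, (old, new) in checks.items()
            if new > old * (1 + tolerance)]

before = {"latency_s": [1.2, 0.8, 1.0, 3.5, 1.1, 0.9],
          "cost": [0.5, 0.4, 0.6, 0.5, 0.5, 0.4]}
# Same cost, but every query now takes twice as long.
after = {"latency_s": [2 * x for x in before["latency_s"]],
         "cost": before["cost"]}
print(detect_regressions(before, after))  # ['p95_latency']
```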

Embracing Automation in Configuration Management

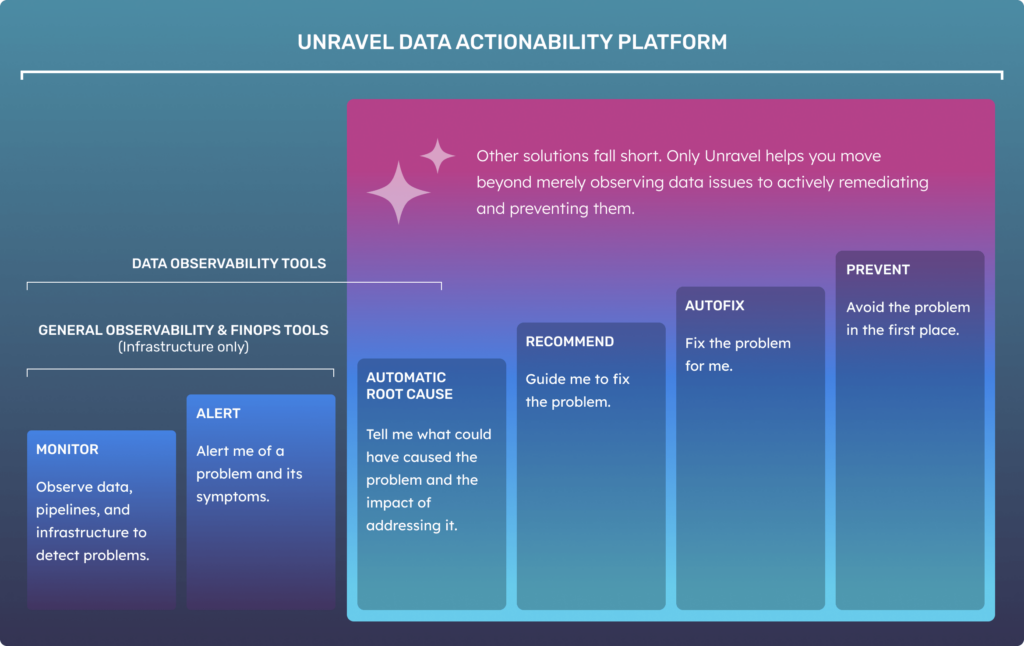

Given these challenges, many organizations are turning to automated solutions to manage and optimize their data platform configurations. Platforms like Unravel can help by:

Continuous Monitoring: Automatically tracking resource utilization, performance metrics, and costs across all aspects of the data platform.

Intelligent Analysis: Using machine learning to identify patterns and anomalies in platform usage and performance that might indicate configuration issues.

Predictive Optimization: Suggesting configuration changes based on observed usage patterns and predicting their impact before implementation.

Automated Adjustment: In some cases, automatically adjusting configurations within predefined parameters to optimize performance and cost.

Policy Enforcement: Helping to implement and enforce governance policies consistently across the platform.

Cross-Platform Optimization: For organizations using multiple data platforms, providing a unified view and consistent optimization approach across different environments.
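Commercial tools use their own models for this kind of analysis. As a much simpler stand-in (a z-score test, not Unravel's method), the sketch below flags an unusual day of spend against a trailing window and, when it fires, proposes a change that is capped per step and clamped to predefined bounds. The auto-suspend example, its bounds, and the step size are hypothetical; Snowflake does expose a warehouse `AUTO_SUSPEND` timeout in seconds, but the rule for changing it here is purely illustrative.

```python
from statistics import mean, stdev

def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it sits more than `threshold` standard deviations
    above the mean of the trailing `history` window (simple z-score test)."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest > mu
    return (latest - mu) / sigma > threshold

def bounded_adjustment(current, proposed, lower, upper, max_step):
    """Move a setting toward `proposed`, but by at most `max_step` per change
    and never outside the predefined [lower, upper] range."""
    step = max(-max_step, min(max_step, proposed - current))
    return max(lower, min(upper, current + step))

daily_credits = [100, 104, 98, 101, 99, 103, 97]  # trailing week of spend
print(is_anomalous(daily_credits, 102))  # False
print(is_anomalous(daily_credits, 160))  # True

# Hypothetical response: shorten an auto-suspend timeout (seconds) from 600
# toward 60, moving at most 300 seconds per change, within [60, 3600].
if is_anomalous(daily_credits, 160):
    print(bounded_adjustment(600, 60, lower=60, upper=3600, max_step=300))  # 300
```

Capping each step and clamping to fixed bounds keeps automated changes within the range a team has already agreed is safe.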

By leveraging automated solutions, data teams can shift from a reactive to a proactive configuration management approach. Instead of constantly fighting fires, teams can focus on strategic initiatives while ensuring their data platforms remain optimized, secure, and cost-effective.

Conclusion

Configuration management in modern data platforms is a complex, ongoing challenge that requires continuous attention and expertise. While the problems are multifaceted and the manual management process can be overwhelming, automated solutions offer a path to simplify and streamline these efforts.

By embracing automation in configuration management, organizations can more effectively optimize their data platform performance, enhance security, control costs, and free up their data teams to focus on extracting value from data rather than endlessly tweaking platform settings.

Remember, whether you use manual methods or automated tools, effective configuration management is an ongoing process. As your data volumes grow, workloads evolve, and platform features change, staying on top of your configurations helps ensure that your data platform continues to meet your business needs efficiently and cost-effectively.

To learn more about how Unravel can help manage and optimize your data platform configurations on Databricks, Snowflake, and BigQuery, request a health check report, take a self-guided product tour, or request a demo.