As most organizations that have started to run a lot of data applications and pipelines in the cloud have found out, it’s really easy for things to get really expensive, really fast. It’s not unusual to see monthly budget overruns of 40% or more, or for companies to have burned through their three-year data cloud budget by early in year two. Consequently, we’re seeing that cloud migration projects and modernization initiatives are stalling out. Plans to scale up usage of modern platforms (think: Databricks and Snowflake, Amazon EMR, Google BigQuery and Dataproc, Azure) are hitting a wall.

Cloud bills are usually an organization’s biggest IT expense, and the sheer massive size of data workloads is driving most of the cloud bill. Many companies are feeling ambushed by their monthly cloud bills, and simply understanding where the money is going is a challenge—let alone figuring out where and how to rein in those costs and keeping them under control. Capacity/budget forecasting becomes a guessing game. There’s a general lack of individual accountability for waste/abuse of cloud resources. It feels like the Wild West, where everybody is spinning up instances left and right, without enough governance or control over how cloud resources are being used.

Enter the emerging discipline of FinOps. Sometimes called cloud cost management or cloud optimization, FinOps is an evolving cloud financial management practice that, in the words of the FinOps Foundation, “enables organizations to get maximum business value by helping engineering, finance, technology and business teams to collaborate on data-driven spending decisions.”

The principles behind the FinOps lifecycle

It’s important to bear in mind that a FinOps approach isn’t just about slashing costs—although you almost invariably will wind up saving money. It’s about empowering data engineers and business teams to make better choices about their cloud usage and derive the most value from their modern data stack investments.

Controlling costs consists of three iterative phases along a FinOps lifecycle:

- Observability: Getting visibility into where the money is going, measuring what’s happening in your cloud environment, understanding what’s going on in a “workload-aware” context

- Optimization: Seeing patterns emerge where you can eliminate waste, removing inefficiencies, actually making things better

- Governance: Going from reactive problem-solving to proactive problem-preventing, sustaining iterative improvements, automating guardrails, enabling self-service optimization

Each phase builds upon the former, to create a virtuous cycle of continuous improvement and empowerment for individual team members—regardless of expertise—to make better decisions about their cloud usage while still hitting their SLAs. In essence, this shifts the budget left, pulling accountability for controlling costs forward.

How Unravel puts FinOps lifecycle principles into practice

Unravel helps turn this conceptual FinOps framework into practical cost governance reality. You need four things to make this FinOps lifecycle work—all of which Unravel is uniquely able to provide:

- You need to capture the right kind of details at a highly granular level from all the various systems in your data stack—horizontally and vertically—from the application down to infrastructure and everything in between.

- All this deep information needs to be correlated into a “workload-aware” business context—cost governance is not just an infrastructure issue, and you need to get a holistic understanding of how everything works together: apps, pipelines, users, data, as well as infrastructure resources.

- You need to be able to automatically identify opportunities to optimize—oversized resources, inefficient code, data tiering—and then make it easy for engineers to implement those optimizations.

- Go from reactive to proactive—not just perpetually scan the horizon for optimization opportunities and respond to them after the fact, but leverage AI to predict capacity needs accurately, implement automated governance guardrails to keep things under control, and even launch corrective actions automatically.

Observability: Understanding costs in context

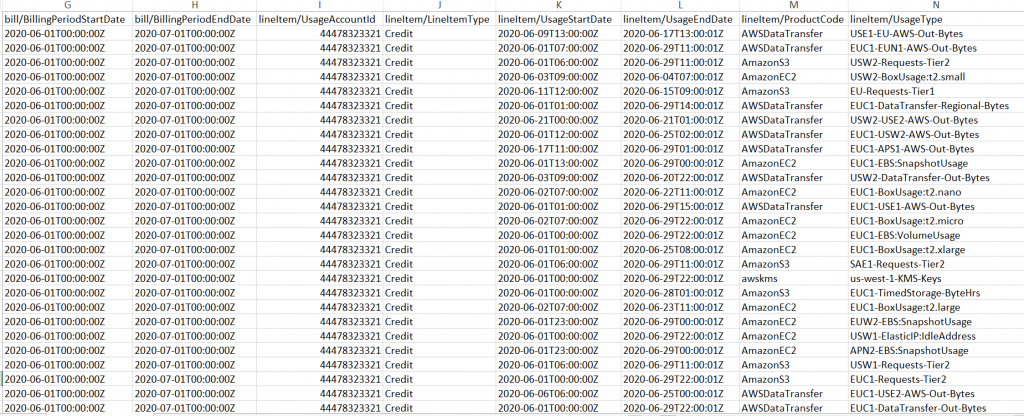

The first step to controlling costs is to understand what’s happening in your cloud environment—who’s spending what, where, why. The key here is to measure everything with precision and in context. Cloud vendor billing consoles (and third-party cost management tools) give you only an aggregated view of total spend on various services (compute, storage, platform), and monthly cloud bills can be pretty opaque with hundreds of individual line items that, again, don’t go any deeper than service type. There’s nothing that tells you which applications are running, who’s submitting them, which datasets are actually touched, and other contextual details.

To counter such obscurity, many organizations employ some sort of cost-allocation tagging taxonomy to categorize and track resource usage. If you already have such a classification in place, Unravel can easily adopt it without having to reinvent the wheel. If you don’t, it’s simple to implement such an approach in Unravel.

Two things to consider when looking at tagging capabilities:

- How deep and atomic is the tagging?

- What’s the frequency?

Unravel lets you apply tags at a highly granular level: by department, team, workload, application, even down to the individual job (or sub-part of a job) or specific user. And it happens in real time—you don’t have to wait around for the end of the monthly billing cycle.

These twin capabilities of capturing and correlating highly granular details and delivering real-time information are not merely nice-to-haves, but must-haves when it comes to the practical implementation of the FinOps lifecycle framework. They enable Unravel to:

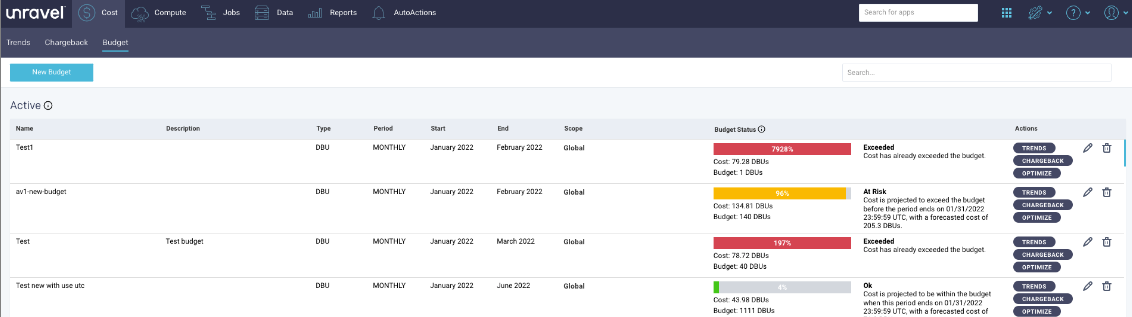

- Track actual spend vs. budget. Know ahead of time whether workload, user, cluster, etc., usage is projected to be on target, is at risk, or has already gone over budget. Preemptively prevent budget overruns rather than getting hit with sticker shock at the end of the month.

Check out the 90-second Automated Budget Tracking interactive demo

Check out the 90-second Automated Budget Tracking interactive demo

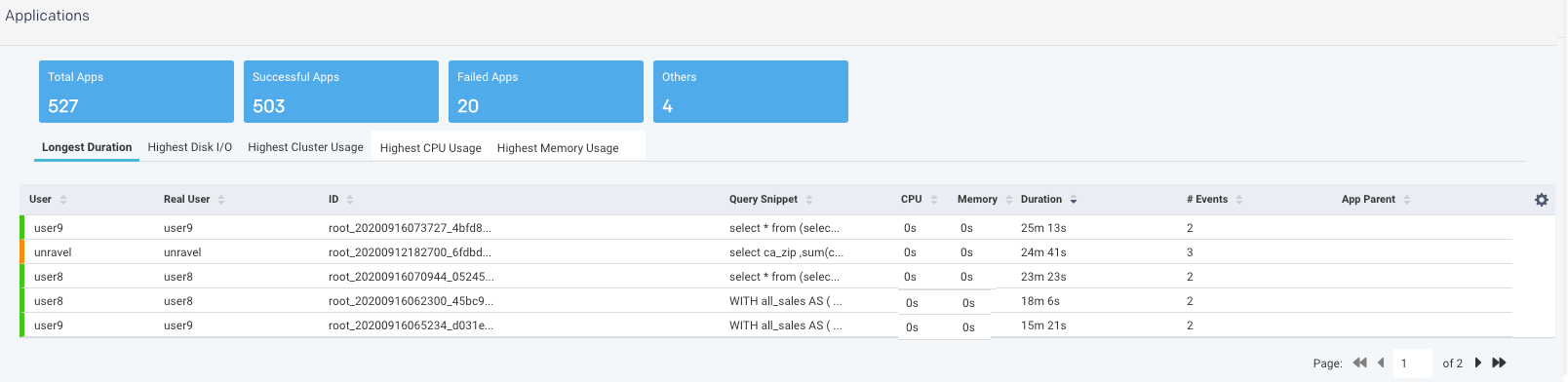

- Identify the big spenders. Know which projects, teams, applications, tables, queue, users, etc. are consuming the most cloud resources.

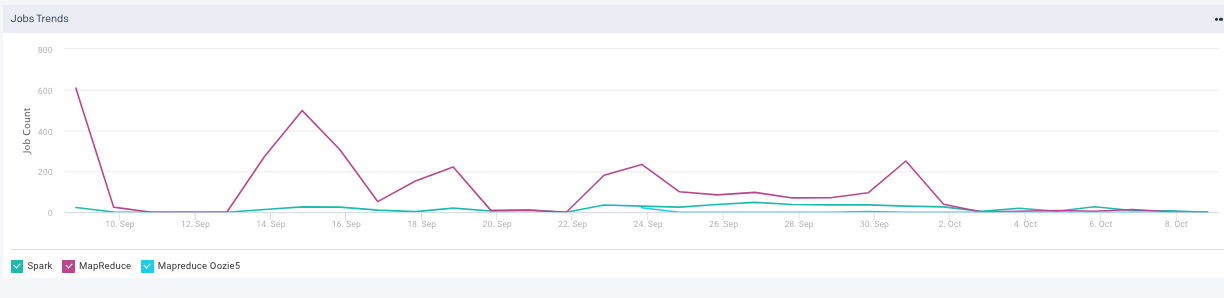

- Understand trends and patterns. Visualize how the cost of clusters changes over time. Understand seasonality-driven peaks and troughs—are you using a ton of resources only on Saturday night? Same time every day? When is there quiet time?—to identify opportunities for improvement.

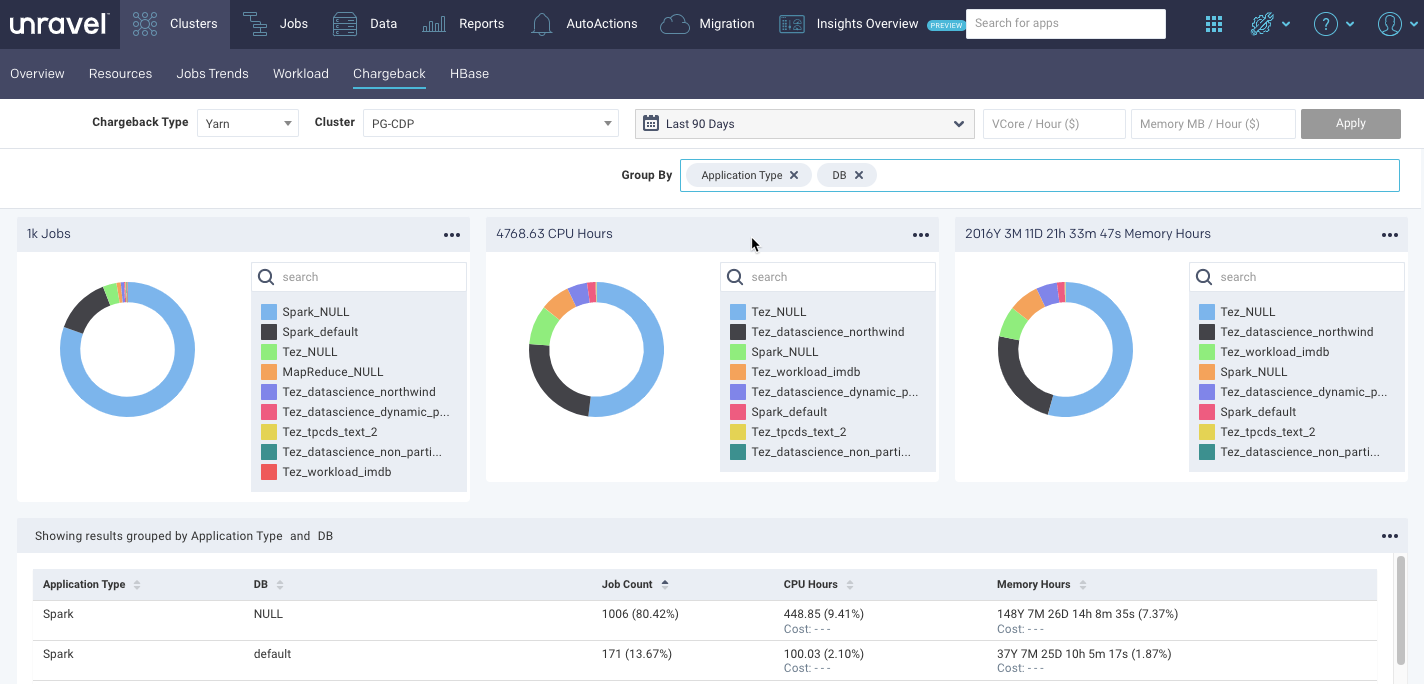

- Implement precise chargebacks/showbacks. Automatically generate cost-allocation reports down to the penny. Pinpoint who’s spending how much, where, and why. Because Unravel captures all the deep, nitty-gritty details about what’s running across your data stack—horizontally and vertically—and correlates them all in a “workload-aware” business context, you can finally solve the problem of allocating costs of shared resources.

Check out the 90-second Chargeback Report interactive demo

Check out the 90-second Chargeback Report interactive demo

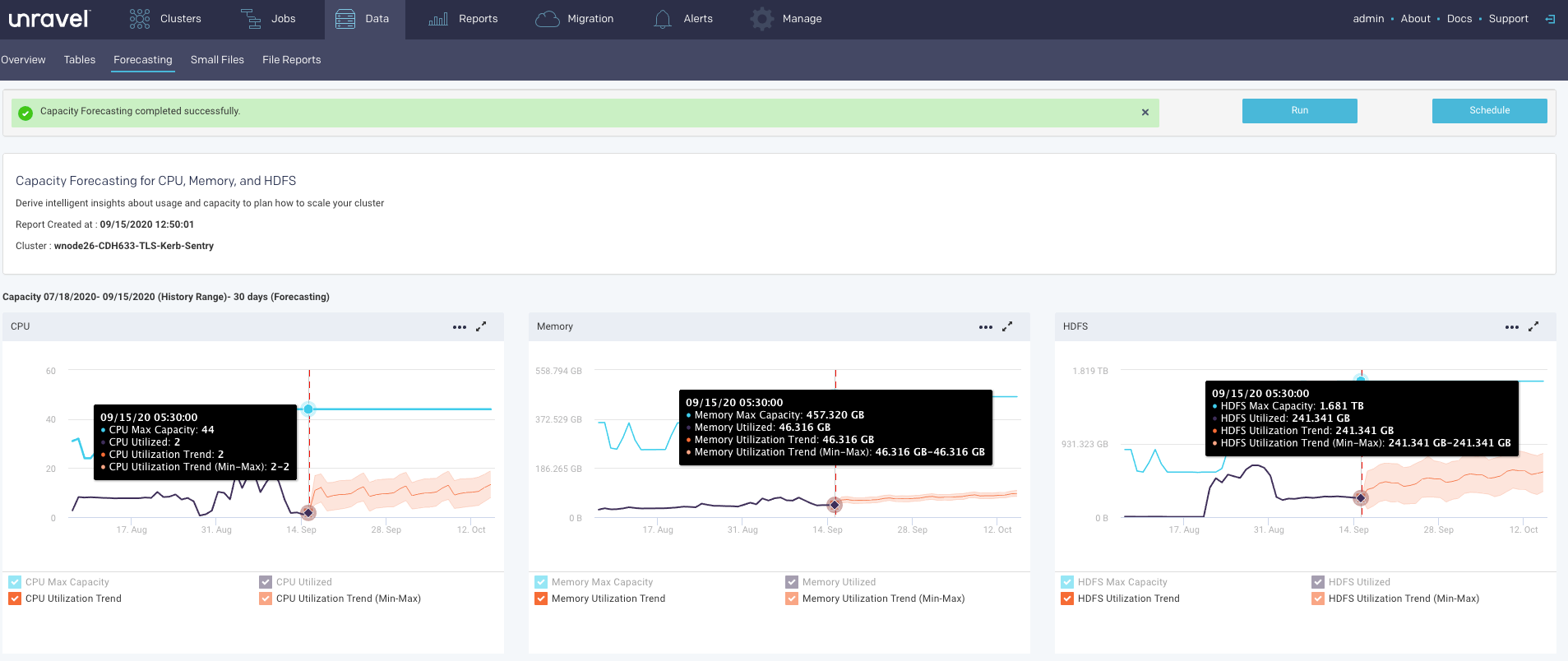

- Forecast capacity with confidence. Run regularly scheduled reports that analyze historical usage and trends to predict future needs.

The observability phase is all about empowering data teams with visibility, precise allocation, real-time budgeting information, and accurate forecasting for cost governance. It provides a 360° view of what’s going on in your cloud environment and how much it’s costing you.

Optimization: Having AI do the heavy lifting

If the observability phase tells you what’s happening and why, the optimization phase is about taking that information to identify where you can eliminate waste, remove inefficiencies, or leverage different instance types that are less expensive without sacrificing SLAs.

In theory, that sounds pretty obvious and straightforward. In practice, it’s anything but. First of all, figuring out where there’s waste is no small task. In an enterprise that runs tens (or hundreds) of thousands of jobs every month, there are countless decisions—at the application, pipeline, and cluster level—to make about where, when, and how to run those jobs. And each individual decision carries a price tag.

And the people making those decisions are not experts in allocating resources; their primary concern and responsibility is to make sure the job runs successfully and meets its SLA. Even an enterprise-scale organization could probably count the number with such operational expertise on one hand.

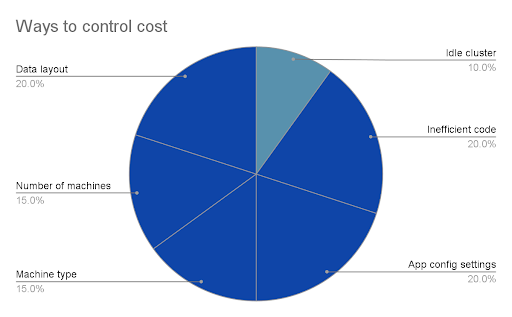

Most cost management solutions can only identify idle clusters. Deployed but no-longer-in-use resources are an easy target but represent only 10% of potential savings. The other 90% lie below the surface.

Digging into the weeds to identify waste and inefficiencies is a laborious, time-consuming effort that these already overburdened experts don’t really have time for.

AI is a must

Yet generating and sharing timely information about how and where to optimize so that individuals can assume ownership and accountability for cloud usage/cost is a bedrock principle of FinOps. This is where AI is mandatory, and only Unravel has the AI-powered insights and prescriptive recommendations to empower data teams to take self-service optimization action quickly and easily.

What Unravel does is take all the full-stack observability information and “throw some math at it”—machine learning and statistical algorithms—to understand application profiles and analyze what resources are actually required to run them vs. the resources that they’re currently consuming.

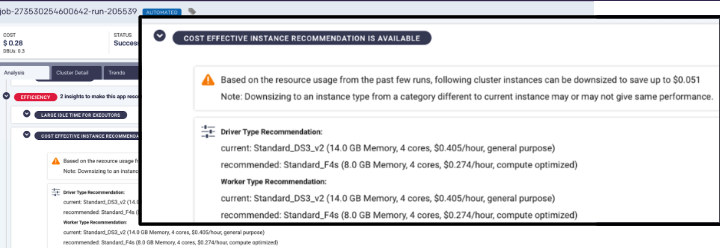

This is where budgets most often go off the rails: overprovisioned (or underutilized) clusters and jobs due to instances and containers that are based on perceived need rather than on actual usage. What makes Unravel unique in the marketplace is that its AI not only continuously scours your data environment to identify exactly where you have allocated too many or oversized resources but gives you crisp, prescriptive recommendations on precisely how to right-size the resources in question.

Check out the 90-second AI Recommendations interactive demo

Check out the 90-second AI Recommendations interactive demo

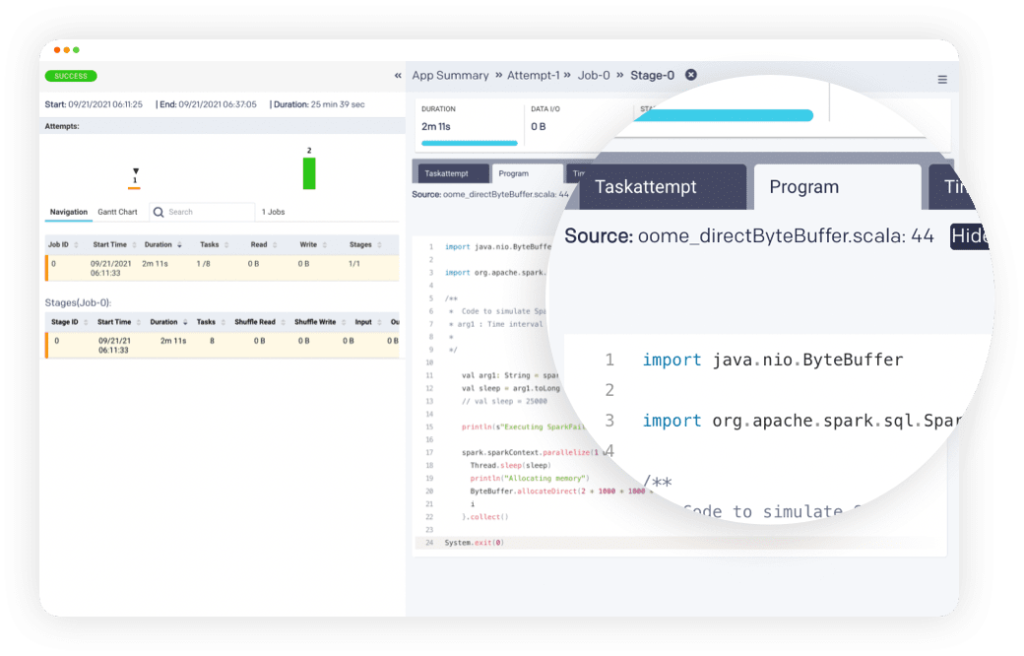

But unoptimized applications are not solely infrastructure issues. Sometimes it’s just bad code. Inefficient or problematic performance wastes money, too. We’ve seen how a single bad join on a multi-petabyte table kept a job running all weekend and wound up costing the company over $500,000. Unravel can prevent this from happening in the first place; the AI understands what your app is trying to do and can tell you this app submitted in this fashion is not efficient—pointing you to the specific line of code causing problems.

Check out the 90-second Code-Level Insights interactive demo

Check out the 90-second Code-Level Insights interactive demo

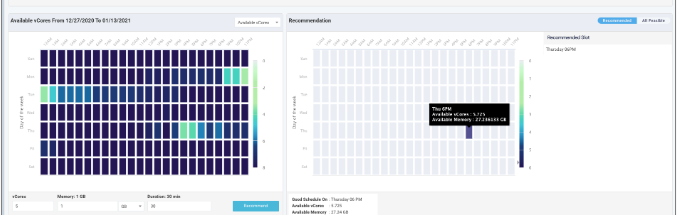

Every cloud provider has auto-scaling options. But what should you auto-scale, to what, and when? Because Unravel has all this comprehensive, granular data in “workload-aware” context, the AI understands trends and usage and access to help you predict and see the days of the week and times of day when auto-scaling is appropriate (or find better times to run jobs). Workload heatmaps based on actual usage make it easy to visualize when, where, and how to scale resources intelligently.

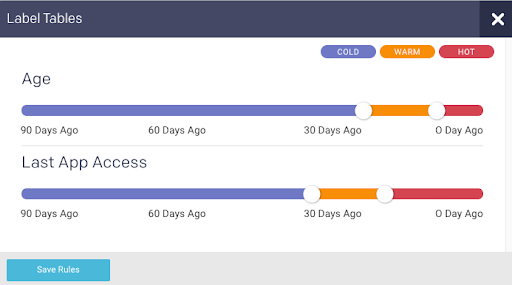

You can save 80-90% on storage costs through data tiering, moving less frequently used data to less expensive options. Most enterprises have petabytes of data, and they’re not using all of it all of the time. Unravel shows which datasets are (not) being used, applying cold/warm/hot labels based on age or usage, so you understand which ones haven’t been touched in months yet still sit on expensive storage.

The optimization phase is where you take action. This is the hardest part of the FinOps lifecycle to put into practice and where Unravel shines. The enormity and complexity of today’s data applications/pipelines comprise hundreds of thousands of places where waste, inefficiencies, or better alternatives exist. Rooting them out and then getting the insights to optimize require the two things no company has enough of: time and expertise. Unravel automatically identifies where you could do better—at the user, job, cluster level—and tells you the exact parameters to apply that would improve costs.

Governance: Going from reactive to proactive

Reducing costs reactively is good. Controlling them proactively is better. Finding and “fixing” waste and inefficiencies too often relies on the same kind of manual firefighting for FinOps that bogs down data engineers who need to troubleshoot failed or slow data applications. In fact, resolving cost issues usually relies on the same handful of scarce experts who have the know-how to resolve performance issues. In many respects, cost and performance are flip sides of the same coin—you’re looking at the same kind of granular details correlated in a holistic workload context, only from a slightly different angle.

AI and automation are crucial. Data applications are simply too big, too complex, too dynamic for even the most skilled humans to manage by hand. What makes FinOps for data so difficult is that the thousands upon thousands of cost optimization opportunities are constantly recasting themselves. Unlike software applications, which are relatively static products, data applications are fluid and ever-changing. Data cloud cost optimization is not a straight line with a beginning and end, but rather a circular loop: the AI-powered insights used to gain contextual full-stack observability and actually implement cost optimization are harnessed to give everyone on the data team at-a-glance understanding of their costs, implement automated guardrails and governance policies, and enable non-expert engineers to make expert-level optimizations via self-service. After all, the best way to spend less time firefighting is to avoid there being a fire in the first place.

- With Unravel, you can set up customizable governance policy-based automated guardrails that set boundaries for any business dimension (looming budget overruns, jobs that exceed size/time/cost thresholds, etc.). Controlling particular users, apps, or business units from exceeding certain behaviors has a profound impact on reining in cloud spend.

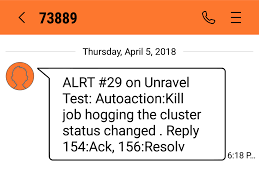

- Proactive alerts can be triggered whenever a guardrail constraint is violated. Alerts can be sent to the individual user—or sent up the chain of command—to let them know their job will miss its SLA or cost too much money and they need to find less expensive options, rewrite it to be more efficient, reschedule it, etc.

- Governance policy violations can even trigger preemptive corrective actions. Unravel can automatically take “circuit breaker” remediation to kill jobs or applications (even clusters), request configuration changes, etc., to put the brakes on rogue users, runaway jobs, overprovisioned resources, and the like.

The governance phase of the FinOps lifecycle deals with getting ahead of cost issues before the fact rather than afterwards. Establishing control in a highly distributed environment is a challenge for any discipline, but Unravel empowers individual users with self-service capabilities that enables them to take individual accountability for their cloud usage, automatically alerts on potential budget-busting, and can even take preemptive corrective action without any human involvement. It’s not quite self-healing, but it’s the next-best thing—the true spirit of AIOps.