Unravel provides full-stack coverage and a unified, end-to-end view of everything going on in your environment, plus recommendations from our rules-based model and our AI engine. Unravel works on-premises, in the cloud, and for cloud migration.

Unravel provides direct support for platforms such as Cloudera Hadoop (CDH), Hortonworks Data Platform (HDP), Cloudera Data Platform (CDP), and a wide range of cloud solutions, including AWS infrastructure as a service (IaaS), Amazon EMR, Microsoft Azure IaaS, Azure HDInsight, and Databricks on both cloud platforms, as well as GCP IaaS, Dataproc, and BigQuery. We have grown to support scores of well-known customers and engage in productive partnerships with both AWS and Microsoft Azure.

We have an ambitious engineering agenda and a relatively large team, with more than half the company in the engineering org. We want our engineering process to be as forward-looking as the product we deliver.

We constantly strive to develop adaptive, end-to-end testing strategies. Unravel's testing started out against a modest customer deployment. We now support scores of large customer deployments with 2000 nodes and 18 clusters, and we had to conquer the giant challenges posed by this massive increase in scale.

Testing is an integral part of every release cycle, so we give top priority to a systematic, automated, scalable, and yet customizable approach for driving the entire cycle. For a new startup, the obvious and quickest approach is the traditional testing model: manually testing and certifying each module or product.

This approach works reasonably well when the product has few features. However, a growing customer base, more features, and the need to support multiple platforms call for proportionally more testing. At that stage, testing becomes time-consuming and cumbersome. If you and your organization are struggling with the traditional, manual testing approach for modern data stack pipelines and are looking for a better solution, read on.

In this blog, we will walk you through:

- How we evolved our robust testing strategies and methodologies.

- The measures we took and the best practices that we applied to make our test infrastructure the best fit for our increasing scale and growing customer base.

Evolution of Unravel’s Test Model

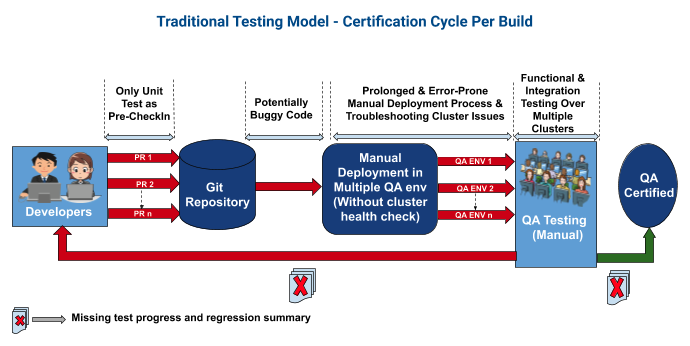

Like any other startup, Unravel had a test infrastructure that followed the traditional testing approach of manual testing, as depicted in the following image:

Initially, with just a few customers, Unravel focused mainly on release certification through manual testing. Different platforms and configurations were tested manually, which took roughly 4-6 weeks per release cycle. With increasing scale, the cycle kept stretching, which made the release train longer and unpredictable.

This type of testing model has quite a few stumbling blocks and does not work well with scaling data sizes and product features. Common problems with the traditional approach include:

- Late discovery of defects, leading to:

  - Last-minute code changes and bug fixes

  - Frantic communication and hurried testing

  - Newer regressions

- Deteriorating testing quality due to:

  - Manual end-to-end testing of the modern data stack pipeline, which is error-prone and tends to miss corner cases, concurrency issues, etc.

  - Difficulty in capturing the lag issues in modern data stack pipelines

- Longer and unpredictable release trains that lead to:

  - Stretched deadlines, since testing time increases proportionally with the number of builds across multiple platforms

  - Increased cost due to high resource requirements such as more man-hours, heavily equipped test environments, etc.
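To make the scaling problem concrete, here is a minimal sketch of how manual certification effort grows when every build has to be tested on every platform. The platform names come from the list earlier in this post; the build labels and the hours-per-run figure are made-up placeholders, not Unravel measurements.

```python
from itertools import product

# Platform names taken from this post; build labels and hours are hypothetical.
PLATFORMS = ["CDH", "HDP", "CDP", "Amazon EMR", "Azure HDInsight", "Dataproc"]


def manual_test_hours(builds, platforms, hours_per_run):
    """Estimate total manual effort when every build is tested on every platform."""
    # One manual pass is needed per (build, platform) combination.
    runs = list(product(builds, platforms))
    return len(runs) * hours_per_run


if __name__ == "__main__":
    for build_count in (1, 2, 4):
        builds = [f"build-{i}" for i in range(1, build_count + 1)]
        total = manual_test_hours(builds, PLATFORMS, hours_per_run=8)
        print(f"{build_count} build(s) x {len(PLATFORMS)} platforms: {total} hours")
```

Doubling the number of builds doubles the effort, and each extra platform adds another full pass per build, which is why manual certification alone stops scaling.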

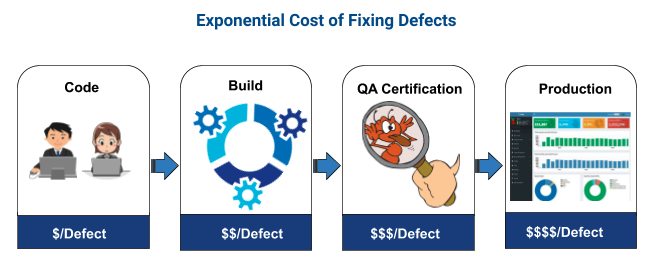

Spotting the defects at a later stage becomes a risky affair, since the cost of fixing defects increases exponentially across the software development life cycle (SDLC).

While the traditional testing model has its cons, it also has some pros. A couple of key advantages are that:

- Manual testing can reproduce customer-specific scenarios

- It can also catch some good bugs where you least expect them to be

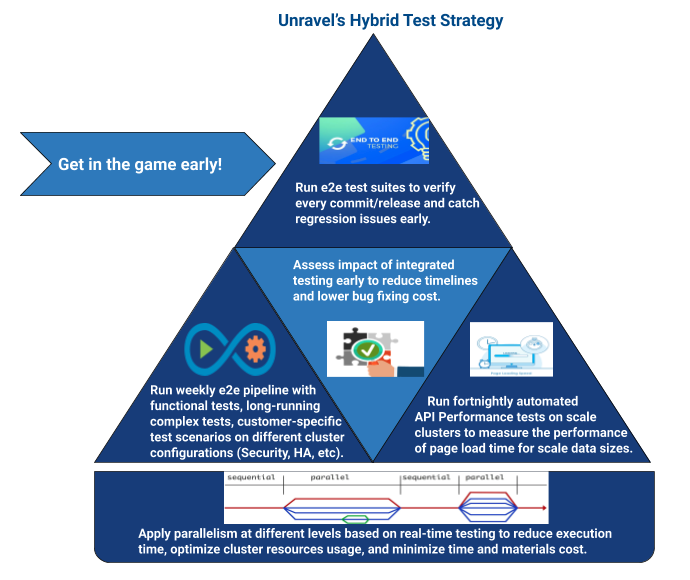

So we resisted the temptation to move fully to what most organizations now implement, a completely mechanized approach. To cope with the challenges faced in the traditional model, we introduced a new test model, a hybrid approach that has, for our purposes, the best of both worlds.

This model is built on the following strategy, which is designed to adapt and scale on top of a robust testing framework.

Our Strategy

Unravel’s hybrid test strategy is the foundation for our new test model.

New Testing Model

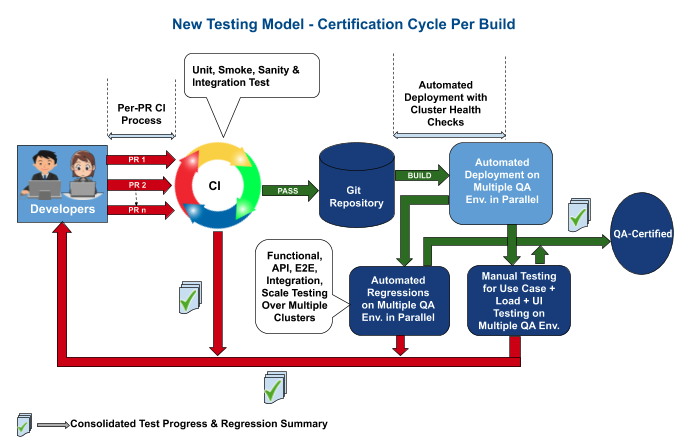

Our current test model is depicted in the following image:

This approach mainly focuses on end-to-end automation testing, which provides the following benefits:

- Runs an automated daily regression suite on every new release build, with end-to-end tests for all the components in the Unravel stack

- Provides a holistic view of the regression results through a rich reporting dashboard

- Works for all kinds of releases (point release, GA release), which makes the automation framework flexible, robust, and scalable

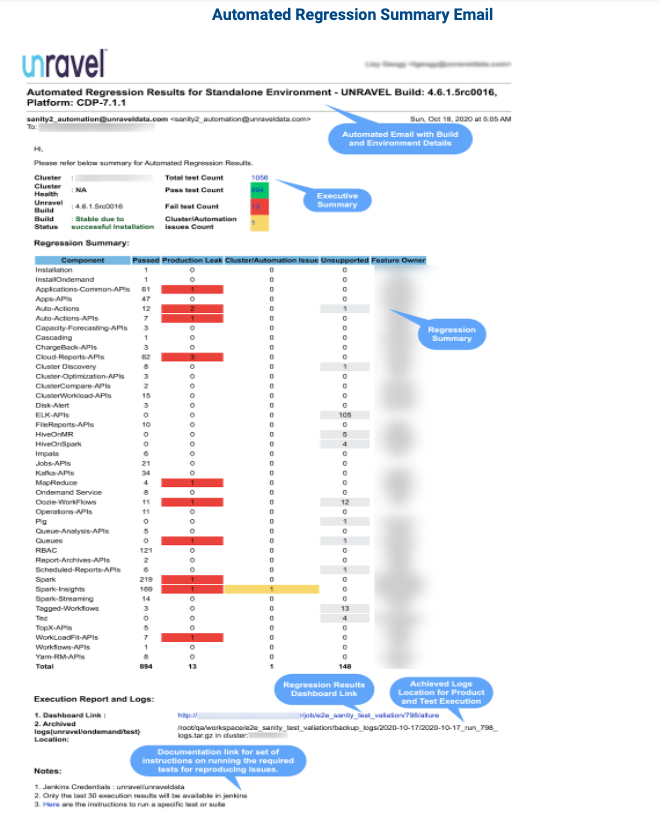

A reporting dashboard and an automated regression summary email are key differentiators of the new test model.
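As a rough illustration of what an automated regression summary email could look like, the sketch below aggregates per-component results and builds a plain-text message with Python's standard library. It is not Unravel's implementation: the result tuples, component names, build ID, and addresses are all hypothetical, and the message is only built, not sent.

```python
from collections import Counter
from email.message import EmailMessage


def summarize(results):
    """Count outcomes per component from (component, test_name, status) tuples."""
    summary = {}
    for component, _test_name, status in results:
        summary.setdefault(component, Counter())[status] += 1
    return summary


def build_summary_email(build_id, results, sender, recipient):
    """Build (but do not send) a plain-text regression summary email."""
    summary = summarize(results)
    lines = [f"Regression summary for build {build_id}", ""]
    for component in sorted(summary):
        counts = summary[component]
        lines.append(
            f"{component}: {counts['passed']} passed, {counts['failed']} failed"
        )
    total_failed = sum(counts["failed"] for counts in summary.values())
    msg = EmailMessage()
    msg["Subject"] = f"[{'FAIL' if total_failed else 'PASS'}] Regression {build_id}"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("\n".join(lines))
    return msg


if __name__ == "__main__":
    # Hypothetical results for illustration only.
    sample = [
        ("ingest", "test_kafka_lag", "passed"),
        ("ingest", "test_hive_hook", "failed"),
        ("ui", "test_dashboard_loads", "passed"),
    ]
    print(build_summary_email("nightly-001", sample, "qa@example.com", "eng@example.com"))
```

A scheduler could run something like this after each nightly regression and hand the message to `smtplib` for delivery; the same aggregated counts could also feed a dashboard.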

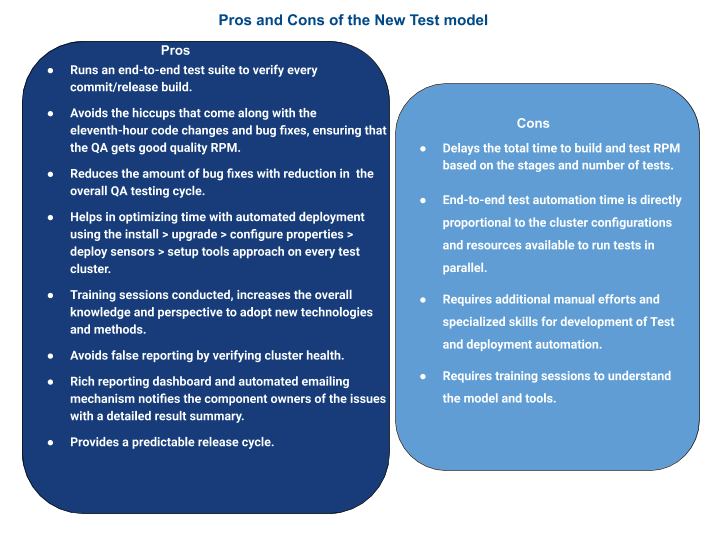

The new test model provides a lot of key advantages. However, there are some disadvantages too.

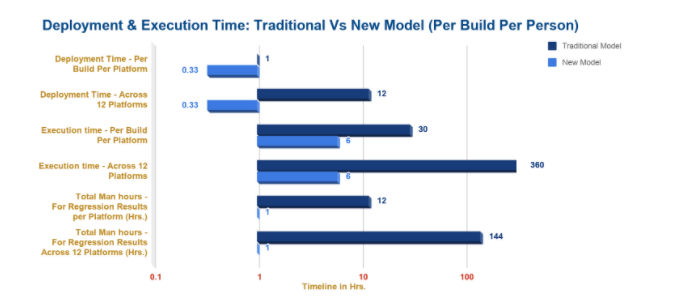

KPI Comparison – Traditional Vs New Model

The following bar chart is derived from the KPI values for deployment and execution time, captured for both the traditional and the new model.

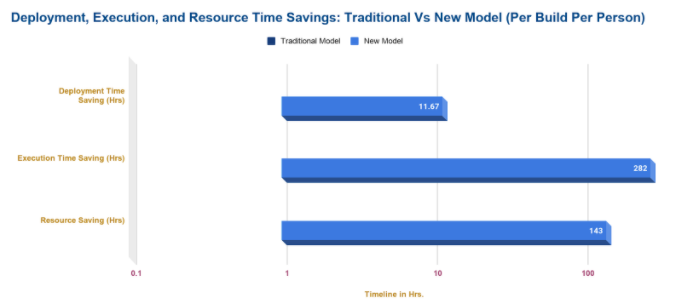

The following graph showcases the comparison of deployment, execution, and resource time savings:

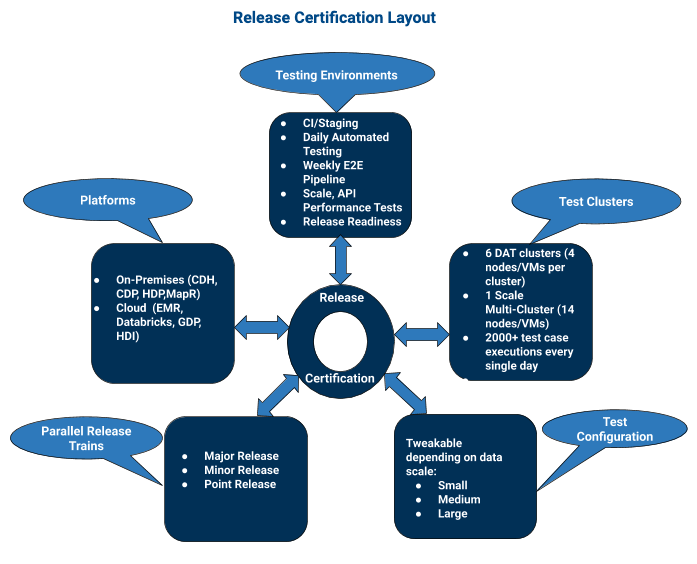
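Savings like those compared above boil down to a percentage reduction per KPI. The sketch below shows that calculation; the KPI names mirror the ones mentioned in this post, but the hour values are invented placeholders, since the actual figures live only in the charts.

```python
def percent_saving(traditional, new):
    """Return the percentage reduction going from `traditional` to `new`."""
    if traditional <= 0:
        raise ValueError("traditional value must be positive")
    return (traditional - new) / traditional * 100.0


if __name__ == "__main__":
    # Hypothetical (traditional_hours, new_hours) pairs, for illustration only.
    kpis = {
        "deployment": (8.0, 2.0),
        "execution": (40.0, 10.0),
    }
    for name, (old, new) in kpis.items():
        print(f"{name}: {percent_saving(old, new):.1f}% time saved")
```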

Release Certification Layout

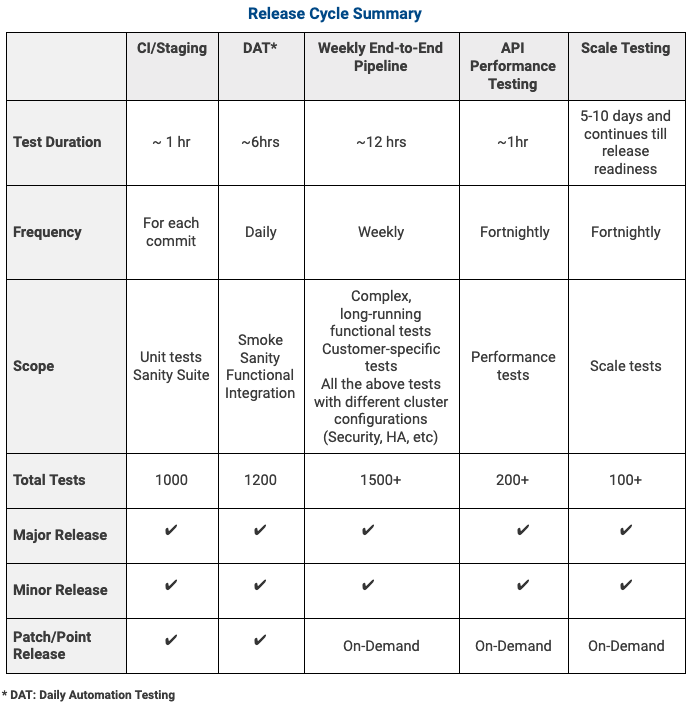

The new testing model comes with a new Release Certification layout, as shown in the image below. The process involved in the certification of a release cycle is summarized in the Release Cycle Summary table.

Release Cycle Summary

Conclusion

Today, Unravel has a rich set of unit tests: more than 1000 tests run on every commit as part of the CI/CD pipeline. The pipeline also includes 1500+ functional sanity test cases, which cover our end-to-end data pipelines as well as the integration test cases. Such a testing strategy can significantly reduce the impact on integrated functionality by proactively highlighting issues in pre-checks.

To cut a long story short, it is a difficult and tricky task to build a flexible, robust, and scalable test infrastructure that caters to varying scales, especially for a cutting-edge product like Unravel and a team that strives for the highest quality in every build.

In this post, we have highlighted commonly faced hurdles in testing modern data stack pipelines. We have also showcased the reliable testing strategies we have developed to efficiently test and certify modern data stack ecosystems. Armed with these test approaches, just like us, you can also effectively tame the scaling giant!
