
At the DataOps Unleashed 2022 virtual conference, AWS Principal Solutions Architect Angelo Carvalho presented “How AWS & Unravel help customers modernize their Big Data workloads with Amazon EMR.” The full session recording is available on demand, but here are some of the highlights.

Angelo opened his session with a quick recap of some of the trends and challenges in big data today: the ever-increasing size and scale of data; the variety of sources, stores, and silos; the need to give people with different skill sets easy access to this data, balanced against the need for security, privacy, and compliance; the expertise required to manage open source projects; and, of course, cost considerations.

He went on to give an overview of how Amazon EMR makes it easy to process petabyte-scale data using the latest open source frameworks such as Spark, Hive, Presto, Trino, HBase, Hudi, and Flink. But the lion’s share of his session delved into what’s new in Amazon EMR within the areas of cost and performance, ease of use, transactional data lakes, and security; the different EMR deployment options; and the EMR Migration Program.

What’s new in Amazon EMR?

Cost and performance

EMR takes advantage of the new AWS Graviton2 instances to provide differentiated performance at lower cost, with up to 30% better price-performance. Angelo presented some compelling statistics:

- Up to 3X faster performance than standard Apache Spark at 40% of the cost

- Up to 2.6X faster performance than open-source Presto at 80% of the cost

- 11.5% average performance improvement with Graviton2

- 25.7% average cost reduction with Graviton2

And you can realize these improvements out of the box while still remaining 100% compliant with open-source APIs.

Ease of use

EMR Studio now supports Presto. EMR Studio is a fully managed integrated development environment (IDE) based on Jupyter notebooks that makes it easy for data scientists and data engineers to develop, visualize, and debug applications on an EMR cluster without having to log into the AWS console. You can attach notebooks to clusters, and detach them again, with a single click at any time.

Transactional data lakes

Amazon EMR has supported Apache Hudi for some time to enable transactional data lakes, but now it has added support for Spark SQL and Apache Iceberg. Iceberg is a high-performance format for huge analytic tables at massive scale. Created by Netflix and Apple, it brings the reliability and simplicity of SQL tables to big data, while making it possible for engines like Spark, Trino, Flink, Presto, and Hive to work safely in the same tables at the same time.

Security

Amazon EMR has a comprehensive set of security features and functions, including isolation, authentication, authorization, encryption, and auditing. The latest version adds user execution role authorizations, as well as fine-grained access controls (FGAC) using AWS Lake Formation, and auditing using Lake Formation via AWS CloudTrail.

Options for deploying EMR

There are multiple options for deploying Amazon EMR:

- Deployment on Amazon EC2 allows customers to choose instances that offer optimal price and performance ratios for specific workloads.

- Deployment on AWS Outposts allows customers to manage and scale Amazon EMR in on-premises environments, just as they would in the cloud.

- Deployment on containers running on Amazon Elastic Kubernetes Service (EKS). Note that, at this time, Spark is the only big data framework supported by EMR on EKS.

- Amazon EMR Serverless is a new deployment option that lets customers run petabyte-scale data analytics in the cloud without having to manage or operate server clusters.
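To make the EC2 option a little more concrete, here is a minimal sketch of the request parameters for a short-lived EMR cluster on Graviton2 instances. It was not part of the session. The function name, cluster name, bucket path, release label, and instance sizes are illustrative assumptions; the default role names assume the standard EMR default roles exist in the account. The dictionary is shaped for the boto3 EMR client's `run_job_flow` call, but the sketch only builds it and never contacts AWS.

```python
import json


def build_transient_cluster_request(
    name,
    script_s3_uri,
    release_label="emr-6.5.0",     # assumed release; pick one that fits your workload
    instance_type="m6g.xlarge",    # m6g is a Graviton2-based instance family
    core_count=2,                  # assumed size, for illustration only
):
    """Build keyword arguments for boto3's EMR run_job_flow call (not sent here)."""
    return {
        "Name": name,
        "ReleaseLabel": release_label,
        "Applications": [{"Name": "Spark"}],
        "Instances": {
            "InstanceGroups": [
                {"Name": "Primary", "InstanceRole": "MASTER",
                 "InstanceType": instance_type, "InstanceCount": 1},
                {"Name": "Core", "InstanceRole": "CORE",
                 "InstanceType": instance_type, "InstanceCount": core_count},
            ],
            # False makes the cluster shut down once its steps have finished.
            "KeepJobFlowAliveWhenNoSteps": False,
            "TerminationProtected": False,
        },
        "Steps": [{
            "Name": "spark-batch-job",
            "ActionOnFailure": "TERMINATE_CLUSTER",
            "HadoopJarStep": {
                "Jar": "command-runner.jar",
                "Args": ["spark-submit", "--deploy-mode", "cluster", script_s3_uri],
            },
        }],
        "JobFlowRole": "EMR_EC2_DefaultRole",  # assumes the default EC2 instance profile
        "ServiceRole": "EMR_DefaultRole",      # assumes the default EMR service role
    }


# Hypothetical job name and script location, for illustration only.
request = build_transient_cluster_request(
    "nightly-batch", "s3://example-bucket/jobs/nightly.py"
)
print(json.dumps(request, indent=2))
```

With credentials configured, the dictionary could be passed as `boto3.client("emr").run_job_flow(**request)`. Keeping the request builder separate from the API call makes it easy to review or test the configuration before anything is launched.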


Using Amazon’s EMR migration program

The EMR migration program was launched to help customers streamline their migration and answer questions like, How do I move this massive data set to EMR? What will my TCO look like if I move to EMR? How do we implement security requirements?

Taking a data-driven approach to determine the optimal migration strategy, the Amazon EMR Migration Program (EMP) consists of three main steps:

1. The assessment stage begins with creating an initial TCO report, conducting discovery meetings, and using Unravel to quickly discover everything about the data estate.

2. The mobilization stage involves delivering an assessment insights summary, qualifying for incentives, and developing a migration readiness plan.

3. The migration stage itself includes executing the lift-and-shift migration of applications and data, before modernizing the migrated applications.

Amazon relies on Unravel to perform a comprehensive AI-powered cloud migration assessment. As Angelo explained, “We partner with Unravel Data to take a faster, more data-driven approach to migration planning. We collect utilization data for about two to four weeks depending on the size of the cluster and the complexity of the workloads.

“During this phase, we are looking to get a summary of all the applications running on the on-premises environment, which provides a breakdown of all workloads and jobs in the customer environment. We identify killed or failed jobs (applications that fail due to resource contention and/or lack of resources) and bursty applications or pipelines.

“For example, we would locate bursty apps to move to EMR, where they can have sufficient resources every time those jobs are run, in a cost-effective way via auto-scaling. We can also estimate migration complexity and effort required to move applications automatically. And lastly, we can identify tools suited for separate clusters. For example, if we identify long-running batch jobs that run at specific intervals, they might be good candidates for spinning a transient cluster only for that job.”
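The kind of triage Angelo describes can be sketched in a few lines of Python. This is not Unravel's method or API. The `JobRun` record, the sample data, and both thresholds are hypothetical, and the "transient cluster" rule looks only at run duration, leaving out any check that the job runs on a fixed schedule.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class JobRun:
    """One observed run of an application (hypothetical record layout)."""
    app: str
    status: str          # "SUCCEEDED", "FAILED", or "KILLED"
    duration_min: float
    avg_vcores: float
    peak_vcores: float


def triage(runs, burst_ratio=3.0, long_run_min=60.0):
    """Group runs by application and flag each app with simple heuristics."""
    by_app = defaultdict(list)
    for run in runs:
        by_app[run.app].append(run)

    report = {"failed_or_killed": [], "bursty": [], "transient_candidates": []}
    for app, app_runs in sorted(by_app.items()):
        # Any failed or killed run puts the app on the review list.
        if any(r.status in ("FAILED", "KILLED") for r in app_runs):
            report["failed_or_killed"].append(app)
        # "Bursty" here means peak usage is several times the average usage.
        if any(r.avg_vcores > 0 and r.peak_vcores / r.avg_vcores >= burst_ratio
               for r in app_runs):
            report["bursty"].append(app)
        # Apps whose runs are all long are candidates for a transient cluster.
        if all(r.duration_min >= long_run_min for r in app_runs):
            report["transient_candidates"].append(app)
    return report


# Made-up utilization data, for illustration only.
runs = [
    JobRun("nightly_etl", "SUCCEEDED", 180, 40, 48),
    JobRun("nightly_etl", "SUCCEEDED", 175, 42, 50),
    JobRun("ad_hoc_report", "KILLED", 12, 8, 40),
    JobRun("clickstream_agg", "SUCCEEDED", 25, 10, 35),
]
report = triage(runs)
print(report)
```

On this sample data, `ad_hoc_report` is flagged as killed and bursty, `clickstream_agg` as bursty, and `nightly_etl` as a transient-cluster candidate. A real assessment would draw on weeks of collected utilization data and far richer signals than these three rules.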

Unravel is equally valuable during and after migration. Its AI-powered recommendations for optimizing applications simplify tuning, and its full-stack insights accelerate troubleshooting.

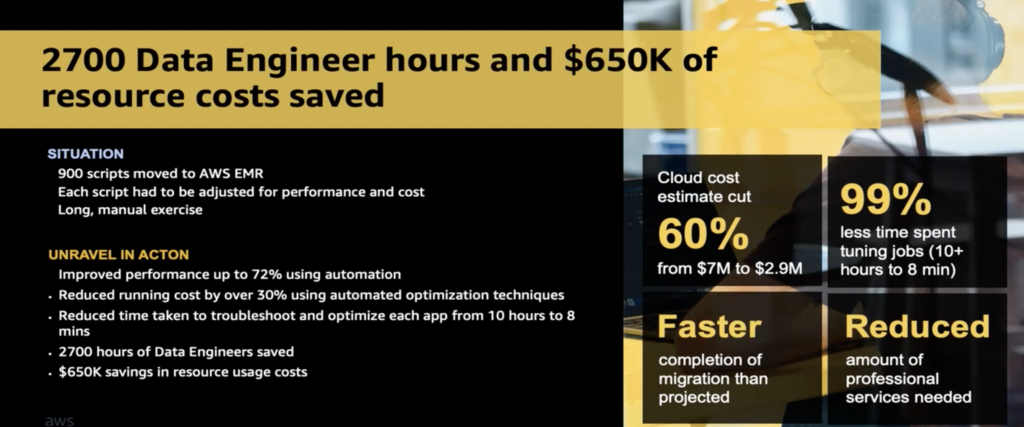

To illustrate, Angelo concluded with an Amazon EMR-Unravel success story: GoDaddy was moving 900 scripts to Amazon EMR, and each one had to be optimized for performance and cost in a time-consuming manual process. With Unravel’s automated optimization for EMR, they spent 99% less time tuning jobs (from 10+ hours down to 8 minutes), saving 2,700 hours of data engineering time. Performance improved by up to 72%, and GoDaddy realized $650,000 in savings on resource usage costs.
