Earlier this year, Unravel released the results of a survey that looked at how organizations are using modern data apps and at general trends in big data. There were many interesting findings, but I was most struck by what the survey revealed about security. First, respondents indicated that they get the most value from big data when applying it to security applications. Fraud detection was listed as the single most effective use case for big data, and cybersecurity intelligence ranked third. This was hardly surprising: security is top of mind for everyone today, and modern security apps like fraud detection rely heavily on AI and machine learning to work properly. Second, despite that value, respondents also indicated that security analytics was the modern data application they struggled most to get right. This didn’t surprise me either, as it reflects the complexity of managing the real-time streaming data common in security apps.

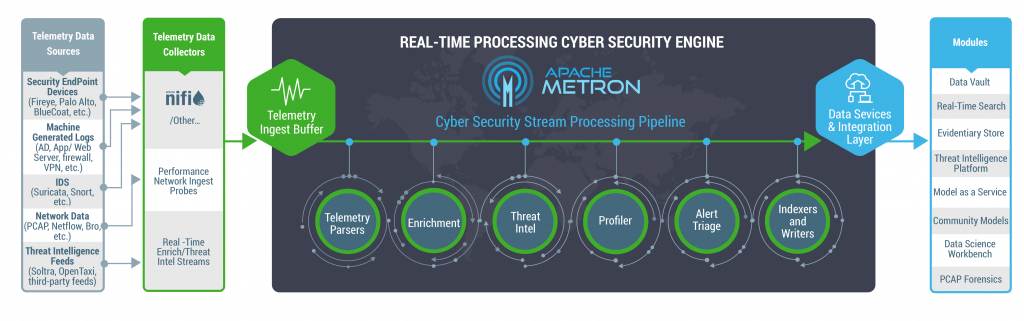

Cybersecurity is difficult from a big data point of view, and many organizations are struggling with it. The hardest part is managing all of the streaming data that pours in from the internet, IoT devices, sensors, edge platforms and other endpoints. Streaming data arrives continuously, piles up quickly and is complex. To manage this data properly and deliver working security apps, you need a solution that provides reliable workload management for cybersecurity analytics and lets you track, diagnose, and troubleshoot issues end to end across all of your data pipelines.

Real-time processing is not a new concept, but the ability to run real-time apps reliably and at scale is. The development of open-source technologies such as Kafka, Spark Streaming, Flink and HBase has enabled developers to create real-time apps that scale, further accelerating their proliferation and business value. Cybersecurity is critical to the well-being of enterprises and large organizations, but many lack the right data operations platform to do it well.

To analyze streaming traffic data, generate statistical features, and train machine learning models that help detect cybersecurity threats on large-scale networks, such as malicious hosts in botnets, big data systems require complex and resource-intensive monitoring methods. Security analysts may apply several methods for pre-processing, selective sampling, and feature generation to the same massive stream of incoming data at once, adding to the existing complexity and performance challenges. Keep in mind that these applications often span multiple systems (e.g., Spark for computation, YARN for resource allocation and scheduling, HDFS or S3 for data access, Kafka or Flink for streaming) and may contain independent, user-defined programs. This makes it inefficient to repeat the data pre-processing and feature generation that many applications have in common, especially on large-scale traffic data.

These inefficiencies create bottlenecks in application execution, hog the underlying systems, cause suboptimal resource utilization, increase failures (e.g., due to out-of-memory errors), and, more importantly, can reduce the chance of detecting a threat or malicious attempt in time.
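As a minimal sketch of why shared pre-processing matters, the example below computes a per-host port-usage entropy feature once and hands the same feature table to two detectors, rather than having each detector re-derive it from the raw flows. The `(src_ip, dst_port)` record format, the detector functions, and their thresholds are illustrative assumptions, not part of any Unravel, Spark, or Kafka API.

```python
import math
from collections import Counter, defaultdict


def port_entropy_by_host(flows):
    """Shannon entropy (in bits) of destination-port usage per source host.

    `flows` is an iterable of (src_ip, dst_port) tuples; this record
    format is an assumption made for illustration.
    """
    ports_by_host = defaultdict(Counter)
    for src_ip, dst_port in flows:
        ports_by_host[src_ip][dst_port] += 1
    features = {}
    for host, counts in ports_by_host.items():
        total = sum(counts.values())
        # H = sum(p * log2(1/p)) over the host's observed ports
        features[host] = sum((c / total) * math.log2(total / c) for c in counts.values())
    return features


# Hypothetical detectors: both consume the same precomputed feature table.
def scan_detector(features, threshold=3.0):
    # High entropy: the host touches many different ports (possible port scan).
    return sorted(h for h, e in features.items() if e >= threshold)


def beacon_detector(features, threshold=0.5):
    # Very low entropy: the host keeps hitting one port (possible beaconing).
    return sorted(h for h, e in features.items() if e <= threshold)


flows = (
    [("10.0.0.1", port) for port in range(1, 17)]  # 16 distinct ports
    + [("10.0.0.2", 443)] * 20                     # a single port, repeatedly
    + [("10.0.0.3", 80), ("10.0.0.3", 443)]        # two ports
)
features = port_entropy_by_host(flows)  # computed once, shared by both detectors
print(scan_detector(features))    # ['10.0.0.1']
print(beacon_detector(features))  # ['10.0.0.2']
```

In a real deployment this step would more likely run once as a shared stage in the streaming pipeline, with each downstream detector reading its output, which avoids the repeated feature generation described above.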

Unravel’s full-stack platform addresses these challenges and provides a compelling solution for operationalizing security apps. Leveraging artificial intelligence, Unravel has introduced a variety of capabilities that enable better workload management for cybersecurity analytics, all delivered from a single pane of glass. These include:

- Automatic identification of applications that share common characteristics and requirements, grouped by relevant data colocation (e.g., a combination of port usage entropy, IP region or geolocation, and time or flow duration)

- Recommendations on how to segregate applications with different requirements (e.g., disk-I/O-heavy pre-processing tasks vs. compute-heavy feature selection) submitted by different users (e.g., SOC level 1 vs. level 3 analysts)

- Recommendations on how to assign applications with greater sharing opportunities and similar computational profiles to appropriate execution pools or queues

- Automatic fixes for failed applications, drawing on rich historical data from successful and failed runs of the application

- Recommendations for alternative configurations that quickly get failed applications running again and then bring them to a resource-efficient running state
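To illustrate the first capability above, here is a deliberately simple sketch of grouping applications by shared data characteristics. It assumes each application is described by a hypothetical profile (IP region, port usage entropy, and flow duration) and groups applications whose region and bucketed values match exactly. This is a hand-written heuristic for illustration only, not how Unravel’s AI-driven grouping works; the `AppProfile` fields and bucket edges are assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AppProfile:
    # Hypothetical per-application profile; field names are illustrative.
    app_id: str
    ip_region: str
    port_entropy: float     # bits
    flow_duration_s: float  # seconds


def bucket(value, edges):
    """Index of the first edge that `value` falls below (len(edges) if none)."""
    for i, edge in enumerate(edges):
        if value < edge:
            return i
    return len(edges)


def group_by_characteristics(apps, entropy_edges=(1.0, 3.0), duration_edges=(10.0, 60.0)):
    """Group apps whose region, entropy bucket, and duration bucket all match."""
    groups = defaultdict(list)
    for app in apps:
        key = (
            app.ip_region,
            bucket(app.port_entropy, entropy_edges),
            bucket(app.flow_duration_s, duration_edges),
        )
        groups[key].append(app.app_id)
    return dict(groups)


apps = [
    AppProfile("scan-detect-a", "eu-west", 3.8, 5.0),
    AppProfile("scan-detect-b", "eu-west", 3.2, 8.0),
    AppProfile("beacon-detect", "us-east", 0.2, 120.0),
]
for key, members in group_by_characteristics(apps).items():
    print(key, members)
# ('eu-west', 2, 0) ['scan-detect-a', 'scan-detect-b']
# ('us-east', 0, 2) ['beacon-detect']
```

Applications that land in the same group are natural candidates for sharing pre-processing output or for placement in the same execution pool or queue. A production system would more likely learn similarity from historical runs than rely on fixed bucket edges.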

Security apps running on a highly distributed modern data stack are too complex to monitor and manage manually. And when these apps fail, organizations don’t just suffer inconvenience or some lost revenue; their entire business is put at risk. Unravel ensures these apps are reliable and operate at optimal performance. Ours is the only platform that makes such effective use of AI to scrutinize application execution, identify the cause of potential failures, and generate recommendations for improving performance and resource usage, all automatically.